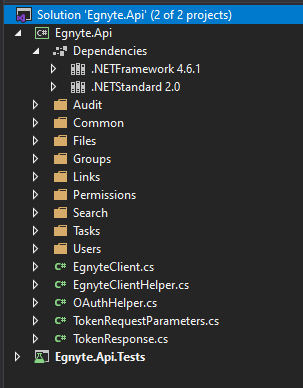

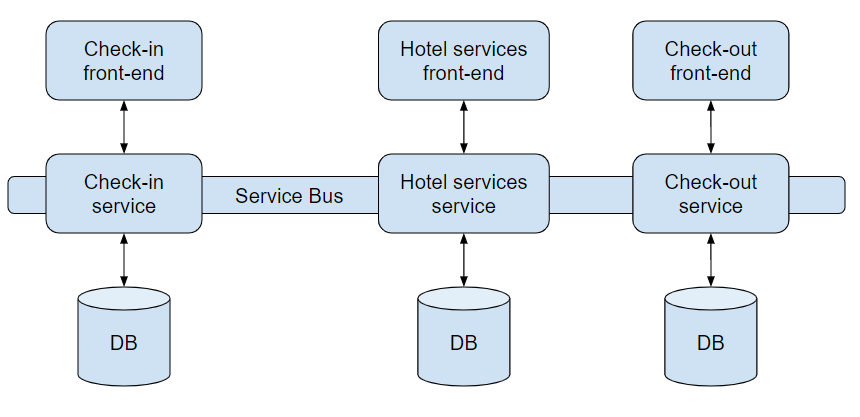

A few years back at Guestline, we decided to build our own payment integration, one we could offer to our clients that would benefit us and save money for the hotels.

The need for a new design

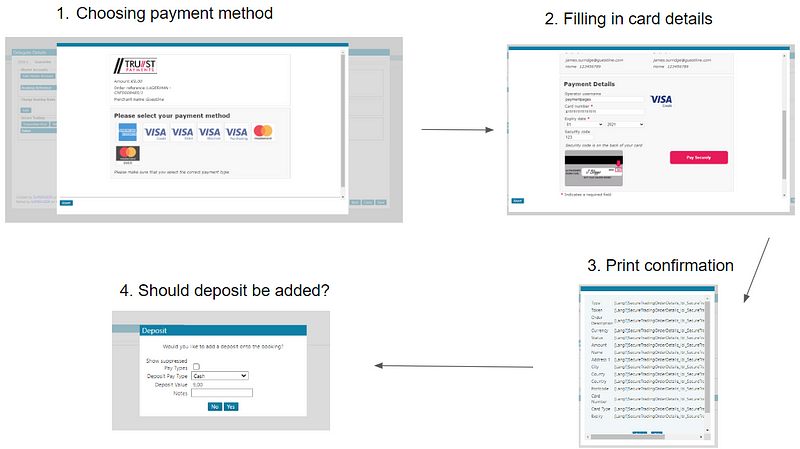

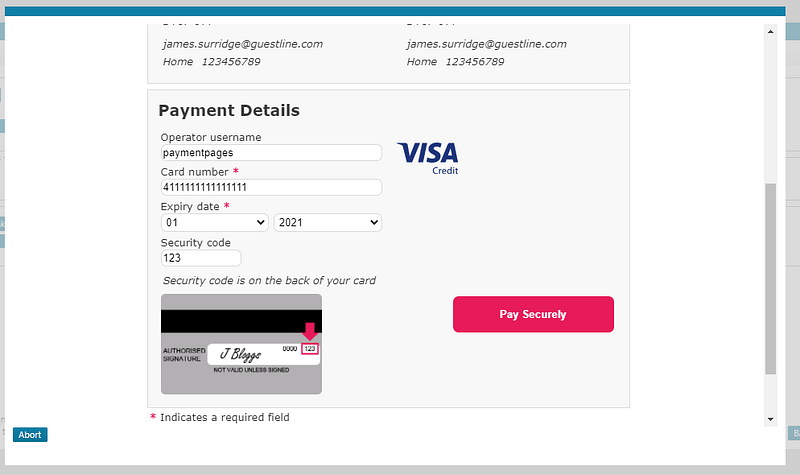

With the new integration, we initially worked on the back-end part of the puzzle, but later we tackled the front end as well. Our existing design had its glory days behind it, and we knew it needed a deep refresh. Let me show you a small part of the process: filling in card details to carry out a transaction. This happens when a client makes a reservation over the phone and a receptionist takes an initial payment with the card details given.

This design is far from perfect, and its user experience is nothing like what we have come across in modern payment integrations. We knew that the first two steps could be merged, but what about the other two? Are they needed?

We need more data

We put those questions to various people: developers, product owners, support, and finally clients. What was the answer?

We probably don’t need this, but it has always been like that.

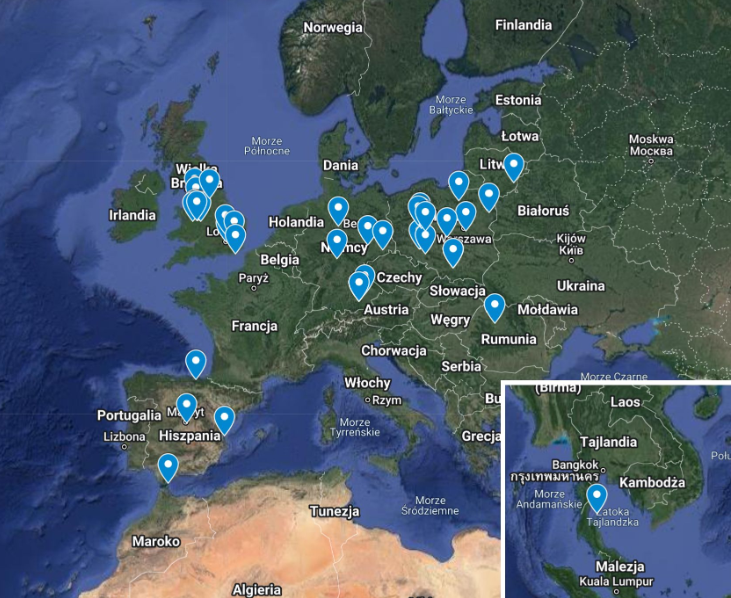

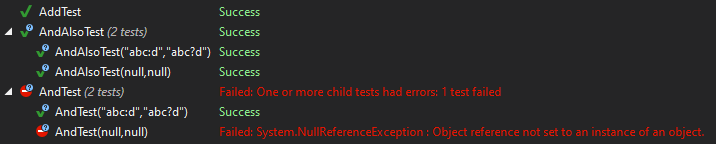

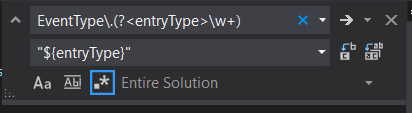

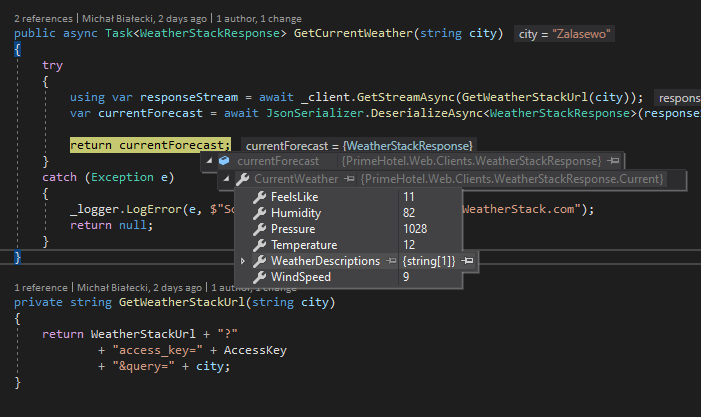

We knew we had to measure it ourselves, and this is where we used Azure Application Insights. We added logging to every step to figure out how users use our application. This can take time if the data you need doesn’t exist yet and you have to add the measurements yourself. Nevertheless, after a few weeks, we had our answer.
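To give a feel for what logging on every step can look like, here is a minimal sketch. It does not use the Application Insights SDK. The `StepTracker` class, the event names, and the `hotel_id` and `payment_id` properties are all hypothetical, and the sink simply collects events in a list. In a real setup the sink would forward each event to your telemetry service.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class StepEvent:
    name: str                    # hypothetical event name, e.g. "DepositAdded"
    properties: Dict[str, str]   # context needed to group events later
    timestamp: float             # seconds since the epoch


@dataclass
class StepTracker:
    # The sink decides where events go. Here it is a plain list's append;
    # in production it could wrap a telemetry client (assumption).
    sink: Callable[[StepEvent], None]
    clock: Callable[[], float] = time.time

    def track(self, name: str, **properties: str) -> None:
        """Record one user action together with its context."""
        self.sink(StepEvent(name, dict(properties), self.clock()))


events: List[StepEvent] = []
tracker = StepTracker(sink=events.append)

# One event per step of the payment process (names are made up).
tracker.track("CardDetailsSubmitted", hotel_id="H1", payment_id="P1")
tracker.track("DepositAdded", hotel_id="H1", payment_id="P1")
tracker.track("ConfirmationPrintSkipped", hotel_id="H1", payment_id="P1")
```

Attaching a payment identifier to every event is what makes it possible to later ask how many payments went through a given step.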

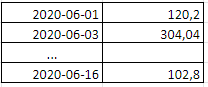

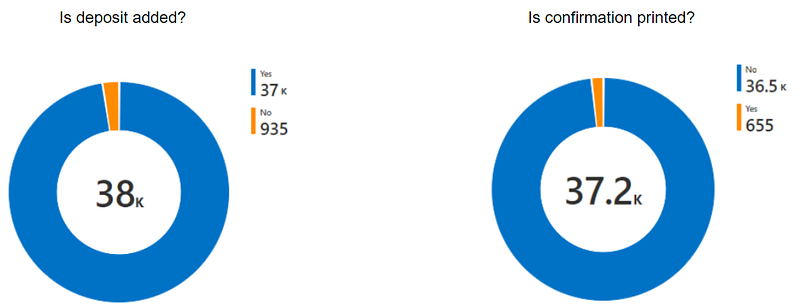

As you can see, the deposit is added 97% of the time, and 98% of the time the user doesn’t print the payment confirmation. At this point, we were almost certain that we could make those steps automatic, but we needed to ask those few percent a question.

Why do you use our payment integration like that?

We jumped on calls with our clients and found out that they mostly did it out of habit, without any specific purpose. With that certainty, we decided that our payment process could be vastly improved.
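Percentages like these come from counting, per payment, whether a given step was logged. The sketch below shows one way to do that. The event names and the four sample payments are invented for illustration and are not our real data.

```python
from typing import Iterable, Set, Tuple


def share_with_step(events: Iterable[Tuple[str, str]], step: str) -> float:
    """Return the fraction of payments that logged `step` at least once.

    Each event is a (payment_id, event_name) pair.
    """
    all_payments: Set[str] = set()
    with_step: Set[str] = set()
    for payment_id, name in events:
        all_payments.add(payment_id)
        if name == step:
            with_step.add(payment_id)
    return len(with_step) / len(all_payments) if all_payments else 0.0


# Made-up sample: four payments, three with a deposit, one with a printout.
sample = [
    ("P1", "PaymentCompleted"), ("P1", "DepositAdded"),
    ("P2", "PaymentCompleted"), ("P2", "DepositAdded"),
    ("P3", "PaymentCompleted"),
    ("P4", "PaymentCompleted"), ("P4", "DepositAdded"),
    ("P4", "ConfirmationPrinted"),
]

deposit_share = share_with_step(sample, "DepositAdded")        # 3 of 4
print_share = share_with_step(sample, "ConfirmationPrinted")   # 1 of 4
```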

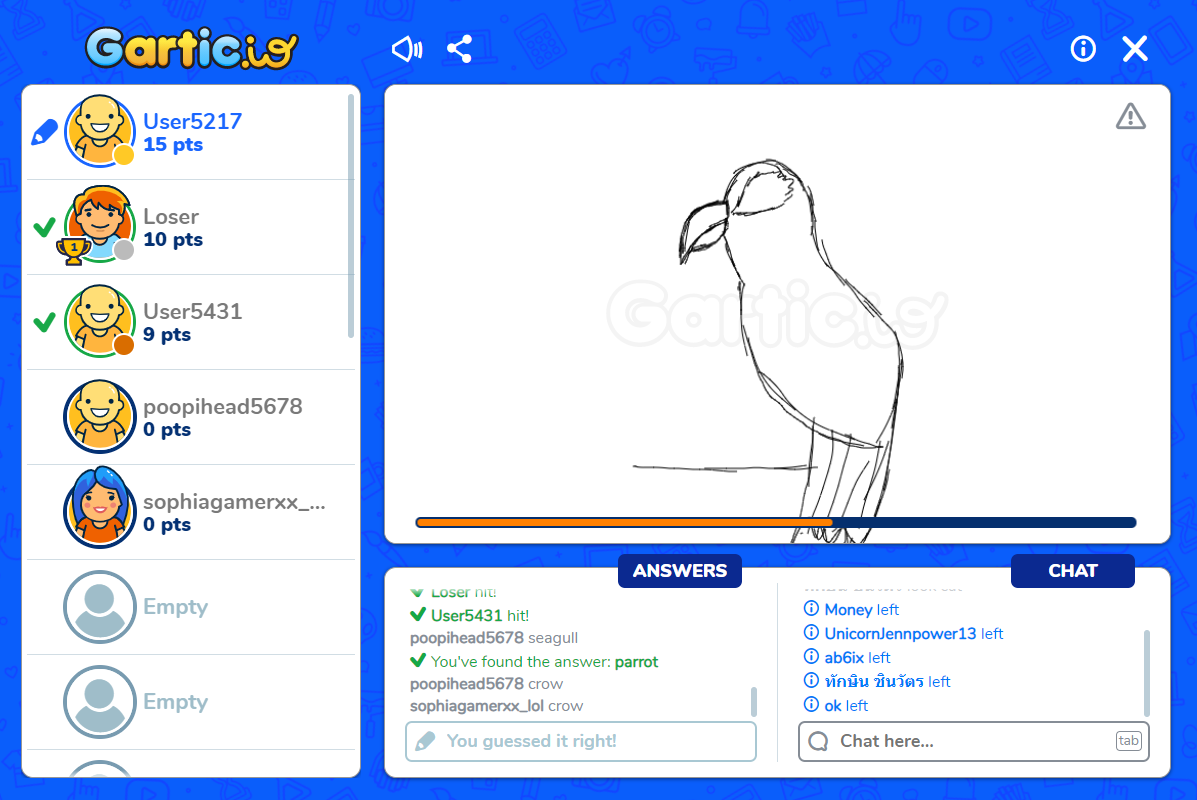

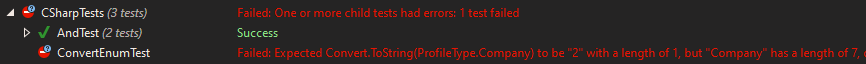

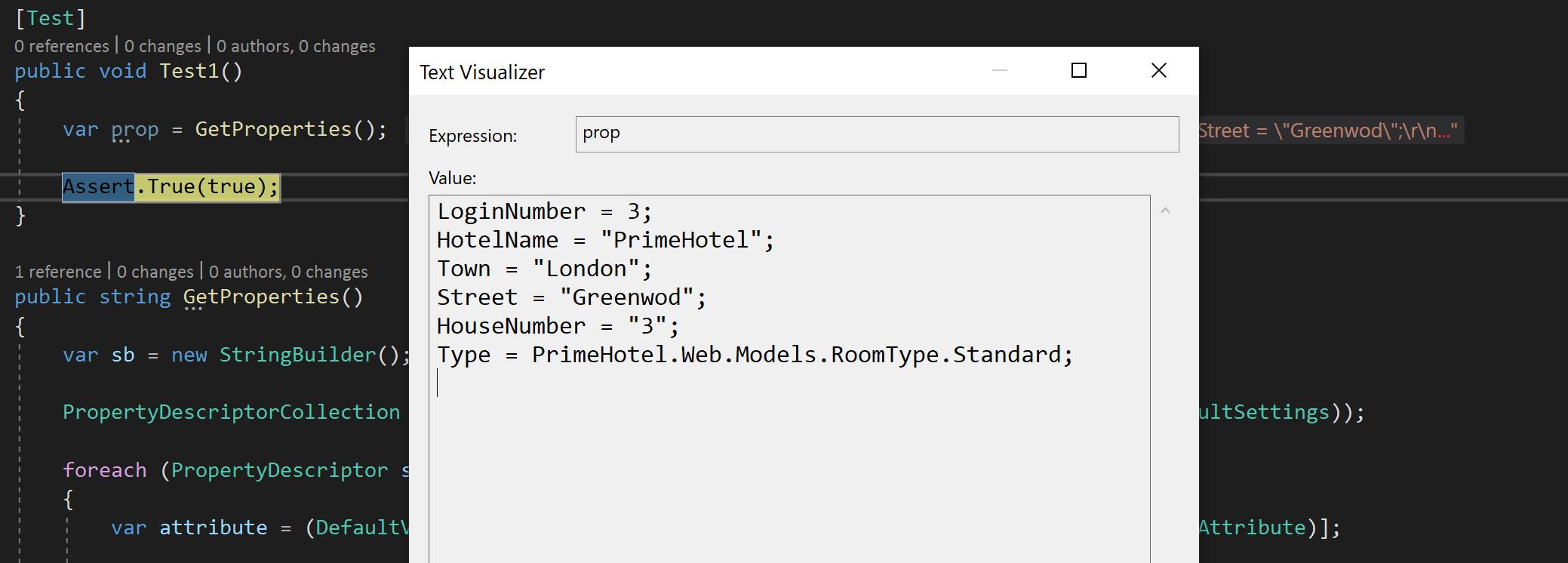

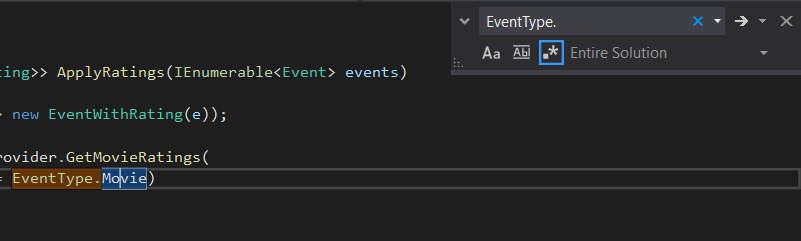

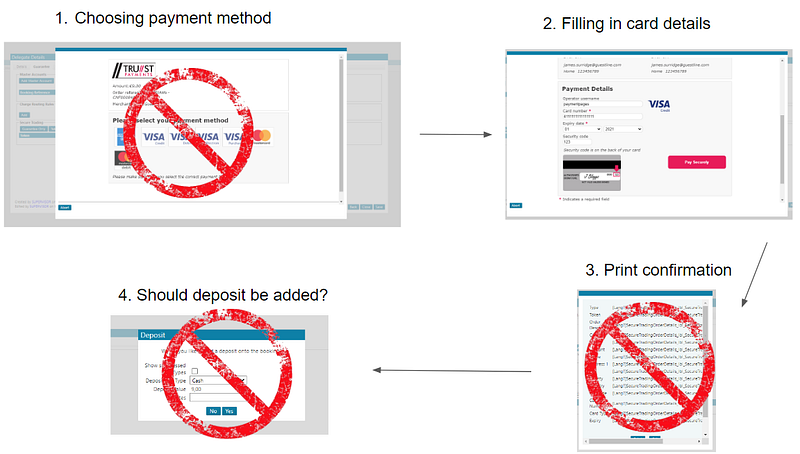

Now we could merge the first two steps and make the last two automatic. The next thing we did was improve the user experience of filling in card details. Here is how it looked before:

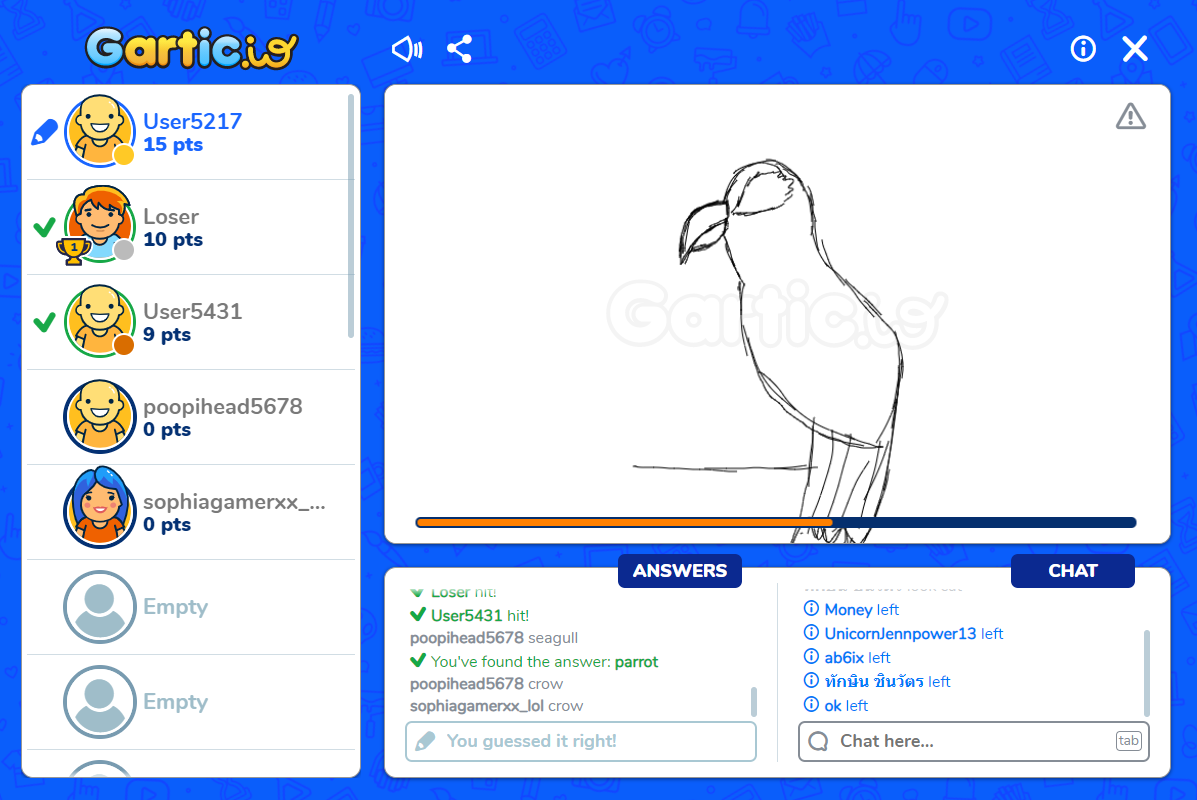

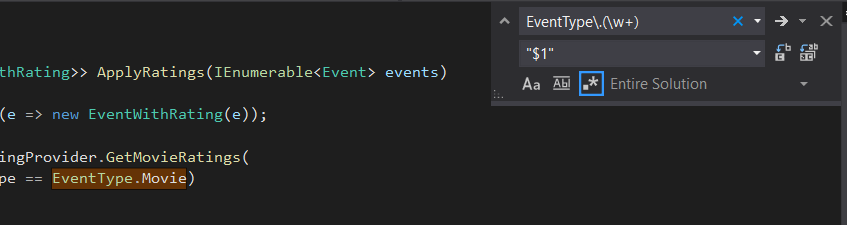

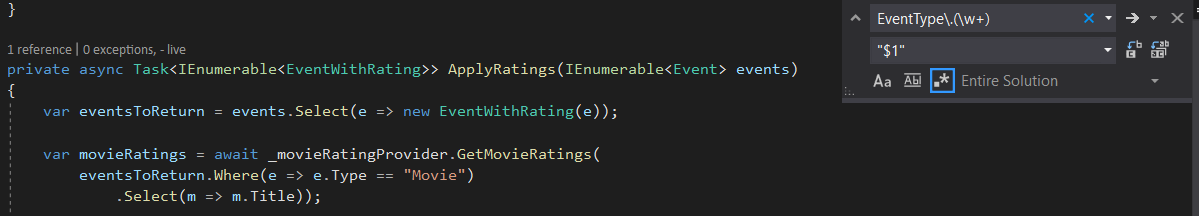

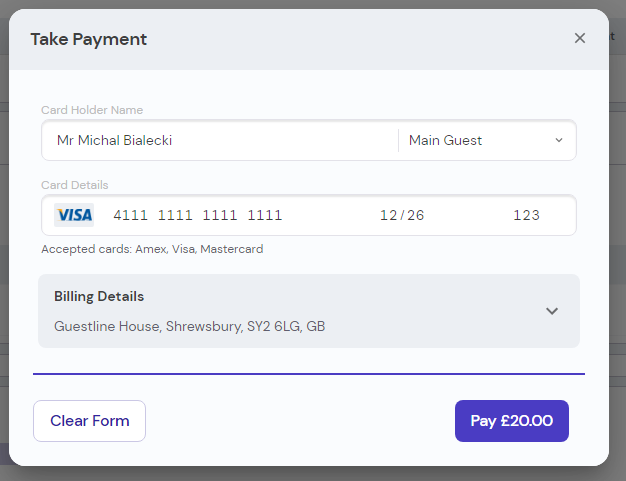

And now the GuestPay version:

This is the single window that the user sees, and the billing details are prefilled from the reservation. The card type is shown based on the card number.

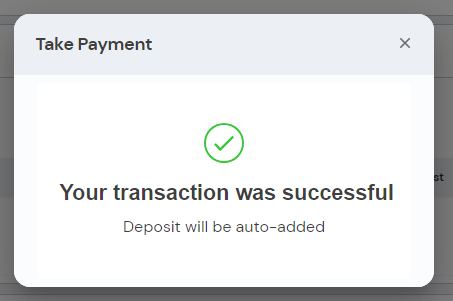
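Showing the card type from the card number usually relies on the leading digits of the number. The sketch below is an illustration only, not the GuestPay implementation. It covers three common brands using their publicly documented prefixes, and the function name `detect_card_type` is hypothetical.

```python
def detect_card_type(card_number: str) -> str:
    """Guess the card brand from the leading digits of the number."""
    # Ignore spaces and dashes that users often type.
    digits = "".join(ch for ch in card_number if ch.isdigit())

    if digits.startswith("4"):
        return "Visa"
    if digits[:2] in ("34", "37"):
        return "American Express"
    # Mastercard uses 51-55 and the newer 2221-2720 range.
    if len(digits) >= 2 and 51 <= int(digits[:2]) <= 55:
        return "Mastercard"
    if len(digits) >= 4 and 2221 <= int(digits[:4]) <= 2720:
        return "Mastercard"
    return "Unknown"
```

Because only the first few digits matter, the brand icon can appear while the receptionist is still typing the number.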

Once the payment is completed, a notification is shown to inform the user that the payment was successful, and the deposit is added automatically.

Rolling out the change

Obviously, this is a massive change for the user, so it had to be introduced gradually. We introduced a feature toggle that we can turn ON or OFF for each hotel separately. We started with just a few sites for a beta rollout and then, based on users’ feedback, made tweaks to the product.

We also focused on how intuitive the new payment integration is and whether users can complete a payment without confusion or any guidance from our side. We wanted to minimize the need for training and a potential rise in support calls regarding payments. This is really important with around 4000 hotels using our product. Any confusion can bring hundreds of support calls, which we really don’t want to generate.
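A per-hotel feature toggle can be as simple as a set of hotel identifiers for which the new flow is switched on. The following is a minimal sketch under that assumption. The class name, the hotel IDs, and the flow names are hypothetical, and a real toggle would typically be stored in configuration or a database rather than in memory.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class HotelFeatureToggle:
    # Hotels for which the new payment flow is ON; everyone else is OFF.
    enabled_hotels: Set[str] = field(default_factory=set)

    def turn_on(self, hotel_id: str) -> None:
        self.enabled_hotels.add(hotel_id)

    def turn_off(self, hotel_id: str) -> None:
        # discard() does not fail if the hotel was never enabled.
        self.enabled_hotels.discard(hotel_id)

    def is_on(self, hotel_id: str) -> bool:
        return hotel_id in self.enabled_hotels


def payment_flow_for(hotel_id: str, toggle: HotelFeatureToggle) -> str:
    """Pick which payment flow a hotel should see."""
    return "new_flow" if toggle.is_on(hotel_id) else "legacy_flow"


toggle = HotelFeatureToggle()
toggle.turn_on("beta-hotel-1")  # start the beta with a single site
```

Keeping the legacy flow as the default means a hotel can be switched back instantly if its users run into trouble.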

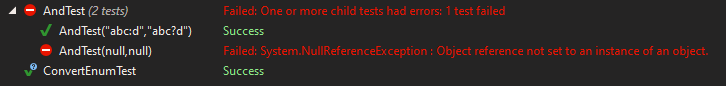

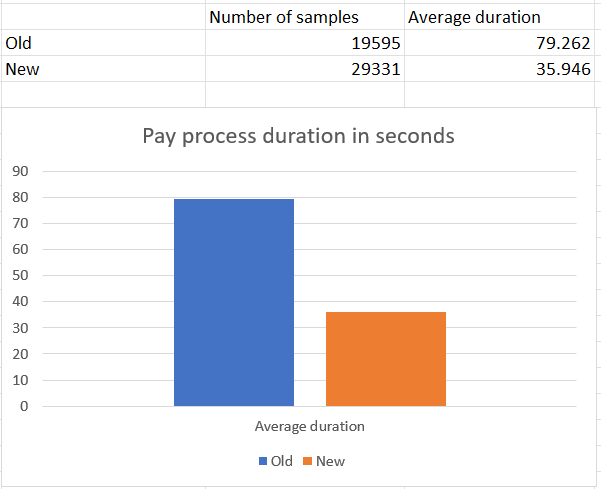

Once we were confident that the process worked, we could switch more hotels over and monitor how users use it. That is also where Application Insights came in handy. One of the metrics that was important to us is the duration of the pay process.

We were able to cut the pay process by more than half: from 79 seconds to 35 seconds on average. If we multiply the saving by the number of payments, we get 44 seconds * 29331 = 1290564 seconds. That’s over 358 hours every 7 days.
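The arithmetic is easy to check. The short script below uses only the figures quoted above; the variable names are mine.

```python
OLD_AVG_SECONDS = 79       # average pay process duration before
NEW_AVG_SECONDS = 35       # average pay process duration after
PAYMENTS_PER_WEEK = 29331  # payments counted over 7 days

saved_per_payment = OLD_AVG_SECONDS - NEW_AVG_SECONDS        # 44 seconds
saved_seconds_per_week = saved_per_payment * PAYMENTS_PER_WEEK
saved_hours_per_week = saved_seconds_per_week / 3600         # seconds -> hours
saved_hours_per_day = saved_hours_per_week / 7               # 7 days per week
relative_reduction = saved_per_payment / OLD_AVG_SECONDS     # share of time cut

print(f"{saved_seconds_per_week} seconds per week")
print(f"{saved_hours_per_week:.1f} hours per week")
print(f"{saved_hours_per_day:.1f} hours per day")
print(f"{relative_reduction:.0%} shorter on average")
```

Dividing the weekly total by 7 gives the daily figure of just over 51 hours.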

We were able to save over 51 hours of guest time every day.

That’s 51 hours of receptionist time that can be used to serve guests and 51 hours of guests’ time not spent on a call to the hotel. With hotels struggling to hire staff, every process that can be sped up or made automatic is essential.

Summary

When introducing a change in a legacy system, data collection is crucial. You can ask your clients for feedback, but it will always be subjective. Statistics don’t lie, but you need to ask the right questions and interpret the answers correctly. Remember that a man and his dog have, on average, 3 legs each.

Gathering data to guide development will make your product more useful. Getting feedback from clients is one of the hardest things to achieve, but with the proper measurements, you can generate that feedback yourself. It can tell you whether a change is heading in the right direction or hint at what needs a closer look.