Once in a while, you get a task, that you need to generate an export file to the 3rd party system. It is popular when communicating with price comparer services, search services, adds services etc. So you need to generate csv file with almost the same data, but in a slightly different format. How to solve it, when you don’t want to write everything separately? How to write you code so that you can make changes very easy?

Once in a while, you get a task, that you need to generate an export file to the 3rd party system. It is popular when communicating with price comparer services, search services, adds services etc. So you need to generate csv file with almost the same data, but in a slightly different format. How to solve it, when you don’t want to write everything separately? How to write you code so that you can make changes very easy?

Let’s start with a simple csv file export

CSV acronym stands for comma separated values, where usually there are column names on the first line. Let’s create a very simple API, that will return products in CSV format. Here is a controller:

[Route("api/Export")]

public class CsvExportController : Controller

{

private readonly ICsvExport _csvExport;

public CsvExportController(ICsvExport csvExport)

{

_csvExport = csvExport;

}

[Route("Products")]

[HttpGet]

public IActionResult Products()

{

var data = _csvExport.ReturnData();

var stream = new MemoryStream(Encoding.UTF8.GetBytes(data));

var result = new FileStreamResult(stream, "text/plain");

result.FileDownloadName = "export_" + DateTime.Now + ".csv";

return result;

}

}

Data here are returned in a string, that is composed of headers in the first line and formatted rows as products.

public class SimpleCsvExport : ICsvExport

{

private readonly IProductGenerator _productGenerator;

public SimpleCsvExport(IProductGenerator productGenerator)

{

_productGenerator = productGenerator;

}

public string ReturnData()

{

var columnNames = GetColumnNames();

var builder = new StringBuilder();

builder.AppendJoin(";", columnNames);

builder.AppendLine();

foreach (var product in _productGenerator.GenerateProducts(100))

{

var values = GetValues(product);

builder.AppendJoin(";", values);

builder.AppendLine();

}

return builder.ToString();

}

private string[] GetColumnNames()

{

return new[] {

"Id",

"Name",

"ReferenceNumber",

"ProducerName",

"QuantityAvailable",

"QuantitySoldLastMonth",

"Weight",

"Price",

"LastOrderDate"};

}

private string[] GetValues(ProductDto product)

{

return new[]

{

product.Id,

product.Name,

product.ReferenceNumber,

product.ProducerName,

product.QuantityAvailable.ToString(),

product.QuantitySoldLastMonth.ToString(),

product.Weight.ToString(),

product.Price.ToString(),

product.LastOrderDate.ToString()

};

}

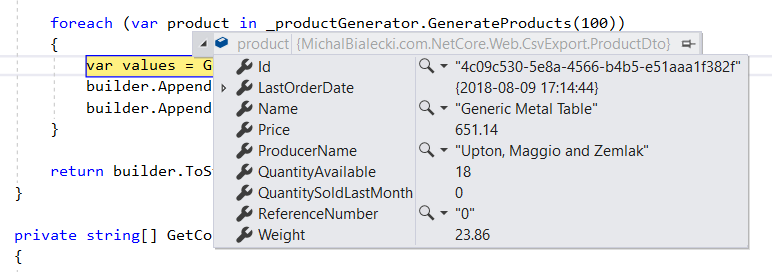

Generating the data

You probably noticed, that there is a part missing, which generates products. I didn’t want to write to much code, but I’d like my data to be rather relevant and similar to the real life scenario. I followed this StackOverflow question: https://stackoverflow.com/questions/6625490/c-sharp-library-to-populate-object-with-random-data and installed Bogus nuget package.

It is a package that is suited for generating test data and it fits perfectly into my scenario. My ProductGenerator looks like this:

public class ProductGenerator : IProductGenerator

{

public List<ProductDto> GenerateProducts(int count)

{

var productGenerator = new Faker<ProductDto>()

.RuleFor(p => p.Id, v => Guid.NewGuid().ToString())

.RuleFor(p => p.Name, v => v.Commerce.ProductName())

.RuleFor(p => p.ReferenceNumber, v => v.IndexGlobal.ToString())

.RuleFor(p => p.ProducerName, v => v.Company.CompanyName())

.RuleFor(p => p.QuantityAvailable, v => v.Random.Number(0, 100))

.RuleFor(p => p.QuantitySoldLastMonth, v => v.Random.Number(0, 20))

.RuleFor(p => p.Weight, v => Math.Round(v.Random.Decimal(0.1m, 50), 2))

.RuleFor(p => p.Price, v => Math.Round(v.Random.Decimal(1, 10000), 2))

.RuleFor(p => p.LastOrderDate, v => v.Date.Recent());

return productGenerator.Generate(count);

}

}

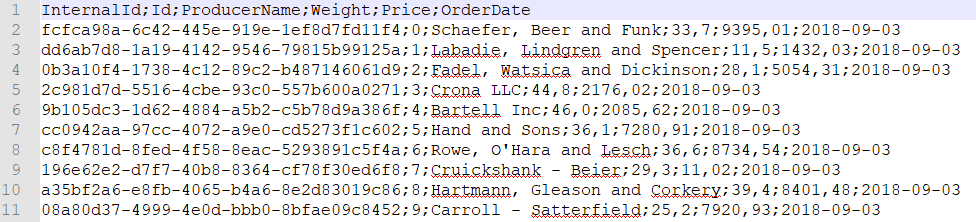

Notice that there are numerous of possibilities to generate data, divided into fields, like Random, Date, Commerce or Company. It is fast and easy to use. This is the result I get in return:

Nice and easy right? I can get relevant data in just a few lines, brilliant!

Making export generic

Let’s imagine that we have to make a couple of exports and they would have the same data, but formatted differently, sorted in a different way. What if we could introduce a series of attributes on a ProductDto class, that would define those custom features?

With a custom ExportAttribute and simple ProductAnalyticsAttribute that looks like this:

[AttributeUsage(AttributeTargets.Property | AttributeTargets.Class, AllowMultiple = true)]

public abstract class ExportAttribute : Attribute

{

public string ExportName { get; set; }

public string Format { get; set; }

public int Order { get; set; }

}

public class ProductAnalyticsAttribute : ExportAttribute

{

}

We can have our ProductDto configured for many exports:

public class ProductDto

{

[ProductComparerExport(ExportName = "InternalId")]

[ProductAnalytics(Order = 1)]

public string Id { get; set; }

[ProductAnalytics(Order = 3)]

public string Name { get; set; }

[ProductComparerExport(ExportName = "Id")]

[ProductAnalytics(Order = 2)]

public string ReferenceNumber { get; set; }

[ProductComparerExport]

[ProductAnalytics(Order = 4)]

public string ProducerName { get; set; }

[ProductAnalytics(Order = 5)]

public int QuantityAvailable { get; set; }

[ProductAnalytics(Order = 6)]

public int QuantitySoldLastMonth { get; set; }

[ProductComparerExport(Format = "0.0")]

public decimal Weight { get; set; }

[ProductComparerExport(Format = "0.00")]

[ProductAnalytics(Order = 7, Format = "0.00")]

public decimal Price { get; set; }

[ProductComparerExport(ExportName = "OrderDate", Format = "yyyy-MM-dd")]

[ProductAnalytics(Order = 8, Format = "MM-dd-yyyy")]

public DateTime LastOrderDate { get; set; }

}

Isn’t it perfect? We can mark properties in our ProductDto, that need to appear in the export. We can define, format, specify an order in which they should appear. Configuring is an easy part, but the meat should be generic and exactly the same for every export. Therefore, we need to work with basic ExportAttribute in out generic classes.

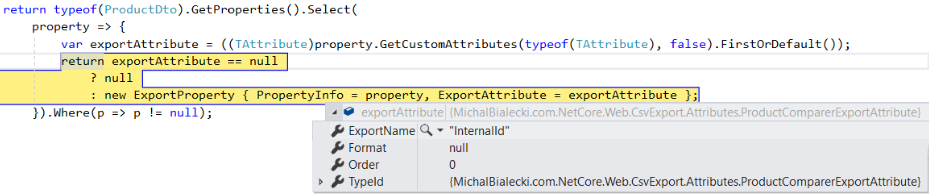

Getting custom attributes configuration

First very important line is for getting properties of our type. This code will list every one of them:

typeof(ProductDto).GetProperties()

Second important line is code that returns instance of our custom attribute if there is one:

var exportAttribute = ((TAttribute)property.GetCustomAttributes(typeof(TAttribute), false).FirstOrDefault());

So now I can use my custom attribute values to get column names for CSV export:

private IEnumerable<ExportProperty> GetColumns<TAttribute>()

where TAttribute : ExportAttribute

{

return typeof(ProductDto).GetProperties().Select(

property => {

var exportAttribute = ((TAttribute)property.GetCustomAttributes(typeof(TAttribute), false).FirstOrDefault());

return exportAttribute == null

? null

: new ExportProperty { PropertyInfo = property, ExportAttribute = exportAttribute };

}).Where(p => p != null);

}

In PropertyInfo I have data about my property like type, value and attributes – it will help me later to get property value for the next rows. In ExportAttributes I have values of my current attribute, which is ProductComparerExportAttribute.

Getting product values for rows with data is really simple when everything is ready.

private List<string> GetProductValues<TAttribute>(ProductDto product, IEnumerable<ExportProperty> columns)

where TAttribute : ExportAttribute

{

var propertyValues = new List<string>();

foreach (var column in columns)

{

propertyValues.Add(GetAttributeValue(product, column.PropertyInfo, column.ExportAttribute));

}

return propertyValues;

}

For every product, I need to iterate through its columns (but only those that are marked with my current custom attribute) and fetch values. Fetching could take one line, but I wanted to implement something more. Notice check for IFormattable and formatting code. I can format everything that can format it’s value with simple ToString(“some format”). It is decimal, int, DateTime, etc.

private string GetAttributeValue<TAttribute>(ProductDto product, PropertyInfo propertyInfo, TAttribute attribute)

where TAttribute : ExportAttribute

{

object value = propertyInfo.GetValue(product);

if (value == null || attribute == null)

{

return string.Empty;

}

if (!string.IsNullOrWhiteSpace(attribute.Format) && value is IFormattable)

{

return (value as IFormattable).ToString(attribute.Format, CultureInfo.CurrentCulture);

}

if (!string.IsNullOrWhiteSpace(attribute.Format))

{

return string.Format(attribute.Format, value);

}

return propertyInfo.GetValue(product).ToString();

}

The sample output file looks like this:

Adding new export with that generic implementation requires minimal code changes. It literally took me no more than 10 minutes for testing purposes. It is just creating a new custom attribute, decorate ProductDto with it and a creating service that calls export. This is how code can be written with thinking about future changes. Usage of generics here is a huge benefit – I created one class that works for all exports. It might not be straightforward to read, but surely it is worth implementing.

You can find the whole code on my GitHub – it is a bit too big to quote it all: https://github.com/mikuam/Blog/

If you like deep backend stuff, you can check my post about Microsoft Orleans – an implementation of actor framework: https://www.michalbialecki.com/2018/03/05/getting-started-microsoft-orleans/

Have a good day;)

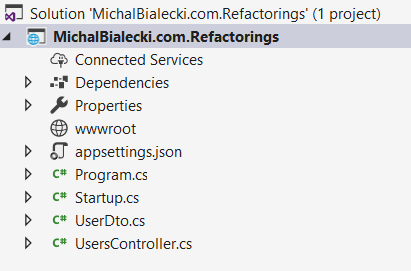

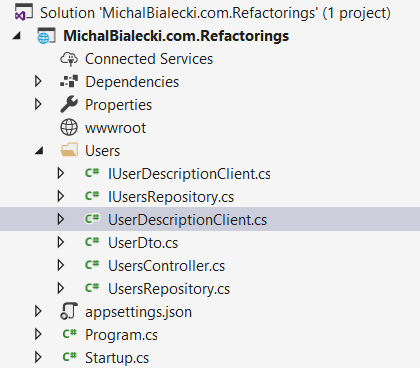

Recently in my team at work, we focus on maintaining older micro-services. While this might not be the most exciting job to do, it is an opportunity to work on a developer craftsmanship. A micro-service or any code that you write, can be old after a year or even a half, cause our developer habits changes. Not only technology goes forward, but we tend to use different nuget packages and in result write the same code in a different way.

Recently in my team at work, we focus on maintaining older micro-services. While this might not be the most exciting job to do, it is an opportunity to work on a developer craftsmanship. A micro-service or any code that you write, can be old after a year or even a half, cause our developer habits changes. Not only technology goes forward, but we tend to use different nuget packages and in result write the same code in a different way.

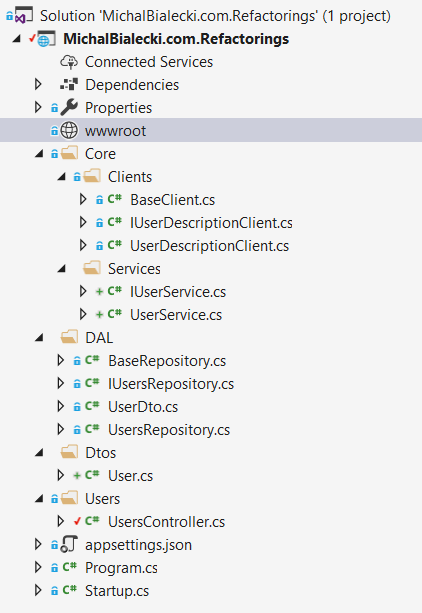

My refactored project is better designed and decoupled, but you never know which direction project might go. It is a threat when implementing completely new micro-service. You can implement whatever you want in the beginning, but you want to implement as much as possible so that the next developer will have an easier job to do. But would it be really easier? Trying to figure out why you wrote so much code for such a little value. In fact reading and understanding bigger project just takes more time than it should.

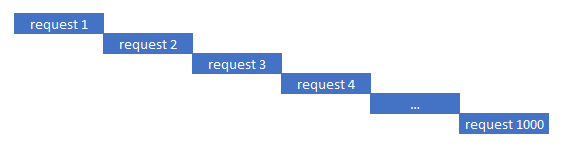

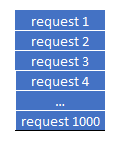

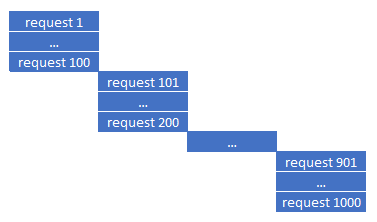

My refactored project is better designed and decoupled, but you never know which direction project might go. It is a threat when implementing completely new micro-service. You can implement whatever you want in the beginning, but you want to implement as much as possible so that the next developer will have an easier job to do. But would it be really easier? Trying to figure out why you wrote so much code for such a little value. In fact reading and understanding bigger project just takes more time than it should. Recently I run into a challenge. I’ working on a micro-service that receives and processes messages one by one. How to send Service Bus messages, not instantly, but when they pile up? The reason for cause it is expensive when it comes to performance. Let’s send messages after 10 piles up or after every 20 seconds.

Recently I run into a challenge. I’ working on a micro-service that receives and processes messages one by one. How to send Service Bus messages, not instantly, but when they pile up? The reason for cause it is expensive when it comes to performance. Let’s send messages after 10 piles up or after every 20 seconds. The thing is that sooner or later timer is destroyed by a garbage collector. Even when I tried to save the reference to timer or use GC.KeepAlive(timer), it always got cleared.

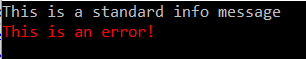

The thing is that sooner or later timer is destroyed by a garbage collector. Even when I tried to save the reference to timer or use GC.KeepAlive(timer), it always got cleared. There’s one little trick to it. Notice I used \r character, that moves caret back to the beginning of the line, where next write overrides last one. It’s a simple animation!

There’s one little trick to it. Notice I used \r character, that moves caret back to the beginning of the line, where next write overrides last one. It’s a simple animation!

Cool, isn’t it? With your imagination and thousands of Unicode characters possibilities are limitless.

Cool, isn’t it? With your imagination and thousands of Unicode characters possibilities are limitless.

Colorful.Console overrides original console, so it does have all existing methods, but it extends original object with new possibilities. This is important, because you can start using this console in your old code, but introduce color only where you want it.

So let’s do some animation with color. This is super easy and a lot of fun.

Colorful.Console overrides original console, so it does have all existing methods, but it extends original object with new possibilities. This is important, because you can start using this console in your old code, but introduce color only where you want it.

So let’s do some animation with color. This is super easy and a lot of fun.

This nuget package offers even more, like colorful text. You can use ASCII fonts to write text. You can download fonts

This nuget package offers even more, like colorful text. You can use ASCII fonts to write text. You can download fonts  Full tutorial is available here:

Full tutorial is available here:

Go or GoLang is an open source programming language. It’s a server-side C like language created by Google. I won’t take much or your time to introduce you to the language, but here is a short summary why it’s worth trying.

Go or GoLang is an open source programming language. It’s a server-side C like language created by Google. I won’t take much or your time to introduce you to the language, but here is a short summary why it’s worth trying.