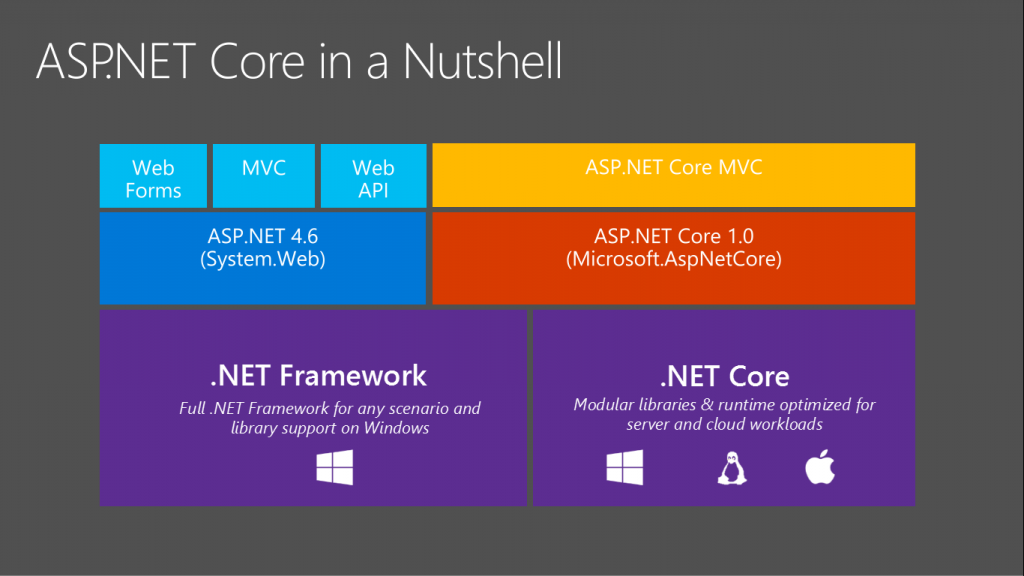

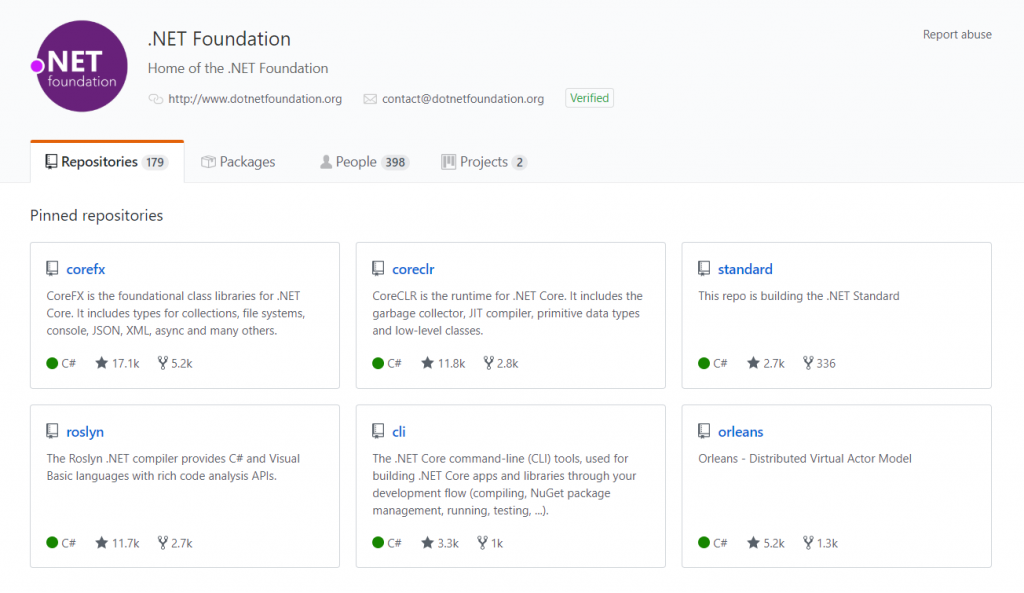

I love .NET Core. It is an awesome concept and a great, lightweight framework to work with. One essential part of the framework environment is the .NET Core CLI. It's a set of cross-platform tools and commands that can create, build and publish your app. Along with the platform come Global Tools: console applications that can be distributed as NuGet packages. Today, I'm going to show you how to make one.

Creating a Global Tool

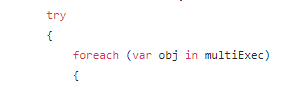

Creating a console application in .NET Core is trivial, but creating a Global Tool wasn't, at least for me. I tried the simple way: create a console application and then make some amendments to turn it into a tool. It didn't work out, so today I'm going to show you how to do it differently.

The first thing you need is a templates package. The .NET Core CLI does not ship with a template for creating a global tool, so you need to install one.

dotnet new --install McMaster.DotNet.GlobalTool.Templates

Once you do it, you can just create a new project:

dotnet new global-tool --command-name MichalBialecki.com.MikTrain

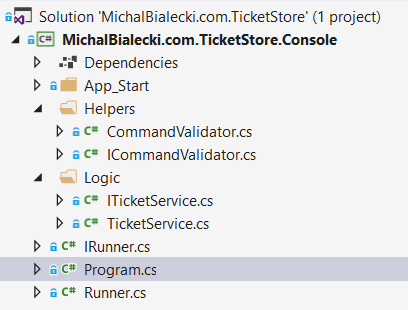

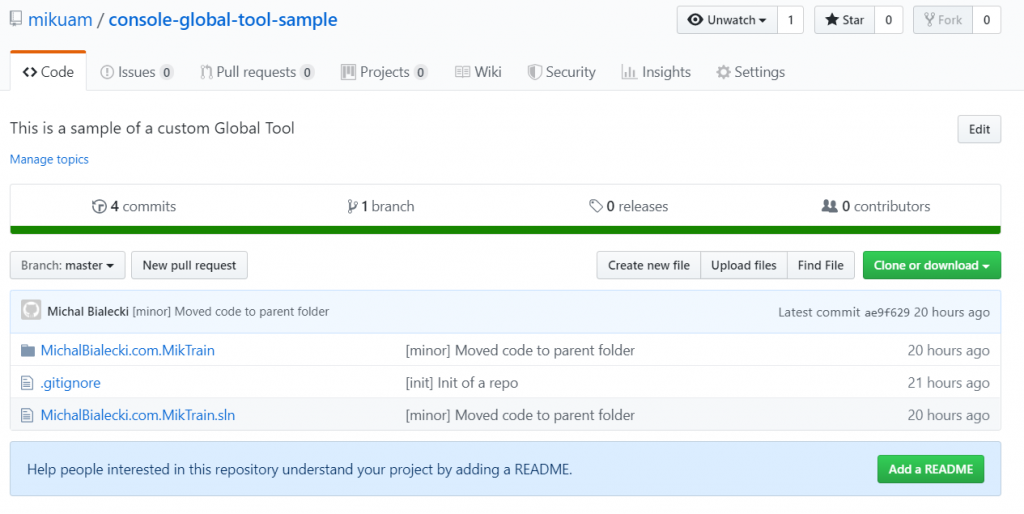

That worked perfectly, and after just a few changes to the Program.cs file, my tool was ready. You can check it out in my repo:

The next step is to create a NuGet package. There are a few ways to do that, but I'll go with the simplest one: using a command.

dotnet pack --output ./

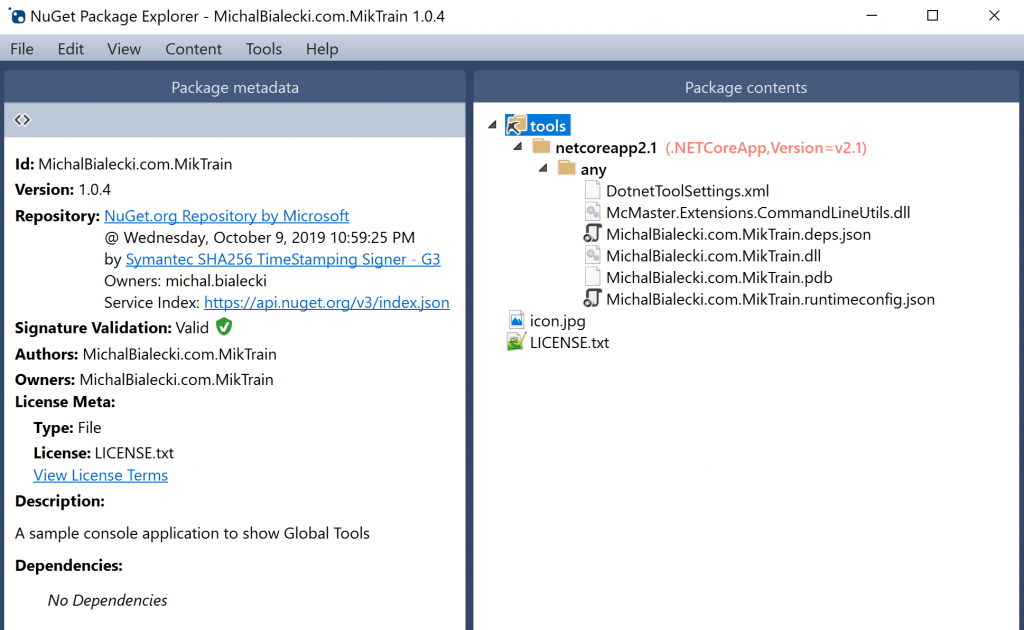

After the build, I have a package, but it's not yet ready to be sent to nuget.org. It is missing a license and an icon, which it needs to pass validation. I ended up adding those files and editing the metadata to get it through. To get a more visual look at things, I'm using NuGet Package Explorer. When I open MichalBialecki.com.MikTrain.1.0.4.nupkg I see:

My nuspec file looks like this:

<?xml version="1.0" encoding="utf-8"?>
<package xmlns="http://schemas.microsoft.com/packaging/2012/06/nuspec.xsd">
  <metadata>
    <id>MichalBialecki.com.MikTrain</id>
    <version>1.0.1</version>
    <authors>Michał Białecki</authors>
    <owners>Michał Białecki</owners>
    <requireLicenseAcceptance>false</requireLicenseAcceptance>
    <license type="file">LICENSE.txt</license>
    <icon>icon.jpg</icon>
    <description>A sample console application to show Global Tools</description>
    <packageTypes>
      <packageType name="DotnetTool" />
    </packageTypes>
    <dependencies>
      <group targetFramework=".NETCoreApp2.2" />
    </dependencies>
  </metadata>
</package>

You might get some errors…

If you encounter the NU1212 error, it might mean that you are missing the packageType tag in your nuspec file. Check the one above.

If you encounter the NU1202 error, you're probably missing the Microsoft.NETCore.Platforms dependency.
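For the NU1212 case, you can also check the package from the command line instead of opening it in a viewer. A .nupkg is a zip archive, so the embedded nuspec can be printed and searched. This is only a minimal sketch: it assumes `unzip` and `grep` are installed, and the two function names are made up for illustration, they are not part of the dotnet CLI.

```shell
#!/usr/bin/env bash
# Sketch: check whether a .nupkg declares the DotnetTool package type.
# Both helper names below are hypothetical, chosen just for this example.

# Prints the .nuspec stored inside the package file passed as $1.
# Works because a .nupkg is a regular zip archive.
print_nuspec() {
  unzip -p "$1" '*.nuspec'
}

# Reads nuspec XML on stdin and succeeds only if the
# <packageType name="DotnetTool" /> entry is present.
has_tool_package_type() {
  grep -q '<packageType name="DotnetTool"'
}
```

With the package from this post it could be used like this: `print_nuspec ./MichalBialecki.com.MikTrain.1.0.4.nupkg | has_tool_package_type && echo "marked as a tool" || echo "packageType is missing"`.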

For more hints, go to this great article.

I uploaded my package through the website, and after the package is verified, you will see:

If it's not available right away, you probably need to wait a couple of hours. At least I had to 🙂

Installing and running an application

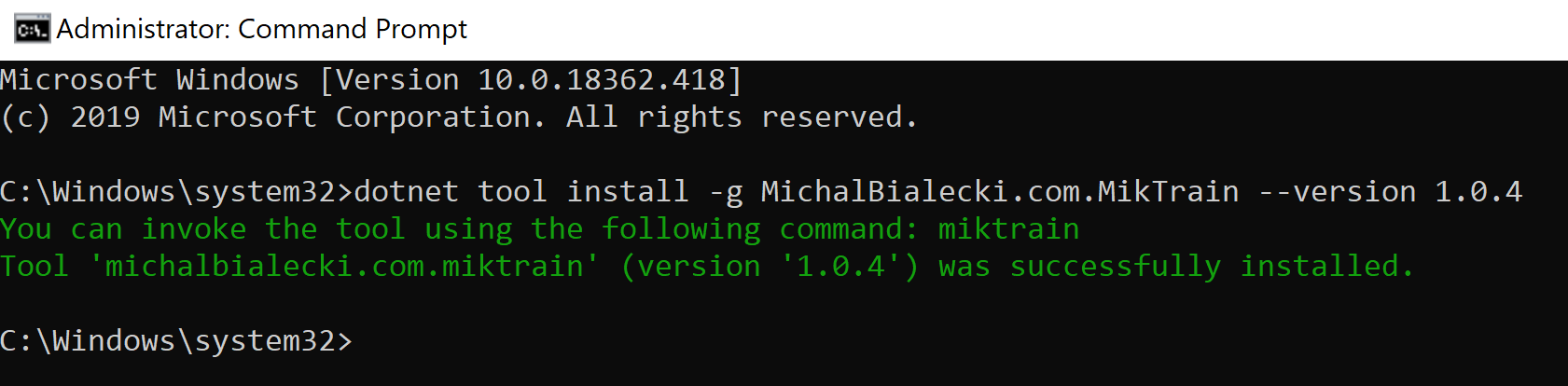

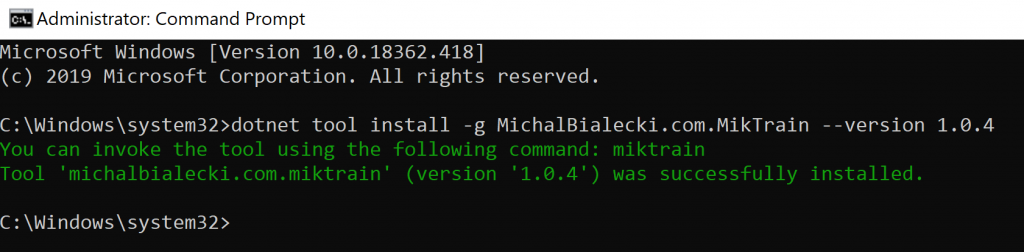

Once the NuGet package is validated and checked by nuget.org, you can try to install your app. To do it, you need to use the command:

dotnet tool install -g MichalBialecki.com.MikTrain --version 1.0.4

Installing my tool gave me this result:

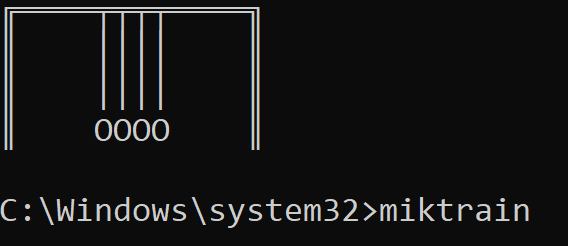

And now I can run my app with the command: miktrain
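If you don't want to wait for nuget.org while you are still testing, the same install command can be pointed at a local folder with `--add-source`. Here is a rough sketch, assuming the .nupkg sits in the folder you pass in (for example the `./` output of `dotnet pack`). The function names and the `DOTNET` variable are my own additions: setting `DOTNET=echo` just prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: install the tool from a local folder instead of nuget.org.
# PACKAGE_ID is the package from this post. DOTNET is a made-up override
# hook: run with DOTNET=echo for a dry run that only prints the commands.
PACKAGE_ID="MichalBialecki.com.MikTrain"
DOTNET="${DOTNET:-dotnet}"

# Installs the tool globally, adding the folder in $1 (the one that holds
# the .nupkg) as an extra package source.
install_from_folder() {
  "$DOTNET" tool install -g "$PACKAGE_ID" --add-source "$1"
}

# Removes the globally installed tool again, e.g. before installing the
# published version from nuget.org.
remove_tool() {
  "$DOTNET" tool uninstall -g "$PACKAGE_ID"
}
```

After `install_from_folder ./` you can run `dotnet tool list -g` to see which version is installed, try the tool, and call `remove_tool` when you are done.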

What is it for?

A global tool is a console application that can serve as a handy utility. I'm sure that you have some of those in your organization. The power of Global Tools is the ability to install and update them from a NuGet package. Let's say something changed in a tool and you just want the newest version. You just need to execute a command:

dotnet tool update -g MichalBialecki.com.MikTrain

And your app is up to date. It can now be shared across the team, and updates can be rolled out much more elegantly. There's no longer a need to share a magic exe file 😛

All code posted here you can find on my GitHub: https://github.com/mikuam/console-global-tool-sample
