This is a post in a series about great code review feedback that I either gave or received. You can read the previous ones here: https://www.michalbialecki.com/2019/06/21/code-reviews/

The context

Caching is an inseparable part of ASP.NET applications. It is the mechanism that makes our web pages load blazing fast with very little code required. However, this blessing comes with responsibility. I need to quote one of my favorite characters here:

Downsides come into play when you’re no longer sure whether the data you see is old or new. Caches in different parts of your ecosystem can make your app inconsistent and incoherent. But let’s not get into details, since that’s not the topic of this post.

Let’s say we have an API endpoint that gets a user by id, and the code looks like this:

    [HttpGet("{id}")]
    public async Task<JsonResult> Get(int id)
    {
        var user = await _usersRepository.GetUserById(id);
        return Json(user);
    }

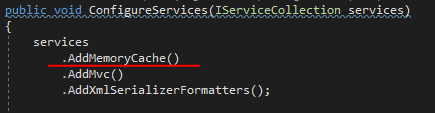

Adding in-memory caching in .NET Core is super simple. You just need to add one line in Startup.cs.
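
That one line is presumably the standard `AddMemoryCache` call. A minimal sketch, assuming the classic `Startup` class with a `ConfigureServices` method (the surrounding class is shown only for context):

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // ...other registrations (MVC, repositories, etc.) stay as they are.

        // Registers IMemoryCache so it can be injected into controllers.
        services.AddMemoryCache();
    }
}
```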

Then pass the IMemoryCache interface as a dependency. The code with in-memory caching would look like this:

    [HttpGet("{id}")]
    public async Task<JsonResult> Get(int id)
    {
        var cacheKey = $"User_{id}";

        if (!_memoryCache.TryGetValue(cacheKey, out UserDto user))
        {
            user = await _usersRepository.GetUserById(id);
            _memoryCache.Set(cacheKey, user, TimeSpan.FromMinutes(5));
        }

        return Json(user);
    }

Review feedback

Why don’t you use IDistributedCache? It has in-memory caching support.

Explanation

A distributed cache is a different type of caching, where data is stored in an external service or storage. When your application scales out and has more than one instance, the cache needs to stay consistent, so you need one place to cache data for all of your app instances. .NET Core supports distributed caching natively through the IDistributedCache interface.

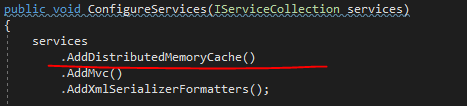

All you need to do is change the caching registration in Startup.cs.
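
The registration in question is most likely `AddDistributedMemoryCache`, the in-memory implementation of `IDistributedCache` that ships with the framework. A minimal sketch, assuming the classic `Startup` class with a `ConfigureServices` method:

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // ...other registrations stay as they are.

        // Used instead of services.AddMemoryCache(): registers an
        // IDistributedCache backed by the memory of the current process.
        services.AddDistributedMemoryCache();
    }
}
```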

Then make a few modifications in the code that uses the cache. First of all, you need to inject the IDistributedCache interface. Also remember that your entity, in this example UserDto, has to be annotated with the Serializable attribute. Using this cache will then look like this:

    [HttpGet("{id}")]
    public async Task<JsonResult> Get(int id)
    {
        var cacheKey = $"User_{id}";
        UserDto user;

        var userBytes = await _distributedCache.GetAsync(cacheKey);
        if (userBytes == null)
        {
            // Cache miss: load from the repository and store the serialized copy.
            user = await _usersRepository.GetUserById(id);
            userBytes = CacheHelper.Serialize(user);
            await _distributedCache.SetAsync(
                cacheKey,
                userBytes,
                new DistributedCacheEntryOptions { SlidingExpiration = TimeSpan.FromMinutes(5) });
        }
        else
        {
            // Cache hit: rebuild the object from the cached bytes.
            user = CacheHelper.Deserialize<UserDto>(userBytes);
        }

        return Json(user);
    }

Using IDistributedCache is more complicated, because it isn’t strongly typed: it stores byte arrays, so you need to serialize and deserialize your objects yourself. To keep my code tidy, I created a CacheHelper class:

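    using System.IO;
    using System.Runtime.Serialization;
    using System.Runtime.Serialization.Formatters.Binary;

    public static class CacheHelper
    {
        // Rebuilds an object of type T from bytes produced by Serialize.
        public static T Deserialize<T>(byte[] param)
        {
            using (var ms = new MemoryStream(param))
            {
                IFormatter br = new BinaryFormatter();
                return (T)br.Deserialize(ms);
            }
        }

        // Converts a [Serializable] object to a byte array; null stays null.
        public static byte[] Serialize(object obj)
        {
            if (obj == null)
            {
                return null;
            }

            var bf = new BinaryFormatter();
            using (var ms = new MemoryStream())
            {
                bf.Serialize(ms, obj);
                return ms.ToArray();
            }
        }
    }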
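
One caveat with this helper: `BinaryFormatter` has been marked obsolete since .NET 5, and as of .NET 9 its methods throw at run time unless a compatibility package is added. A possible alternative, which is not the approach used in this post, is to serialize to JSON with `System.Text.Json`. The sketch below uses a hypothetical `JsonCacheHelper` name; with this variant the Serializable attribute is not needed.

```csharp
using System.Text.Json;

public static class JsonCacheHelper
{
    // Turns an object into UTF-8 encoded JSON bytes; null stays null,
    // mirroring the behavior of CacheHelper.Serialize.
    public static byte[] Serialize<T>(T obj)
    {
        return obj == null ? null : JsonSerializer.SerializeToUtf8Bytes(obj);
    }

    // Rebuilds an object of type T from bytes produced by Serialize.
    // Unlike CacheHelper.Deserialize, a null input yields default(T)
    // instead of throwing.
    public static T Deserialize<T>(byte[] param)
    {
        return param == null ? default(T) : JsonSerializer.Deserialize<T>(param);
    }
}
```

It can be dropped into the controller action in place of `CacheHelper`, since both expose the same two method names.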

Why distributed cache?

Distributed cache has several advantages over other caching scenarios where cached data is stored on individual app servers:

- Is consistent across requests to multiple servers

- Survives server restarts and app deployments

- Doesn’t use local memory

Microsoft’s implementation of the .NET Core distributed cache supports not only the memory cache, but also SQL Server, Redis and NCache. They differ only in the extension method you need to call in Startup.cs, so it is really convenient to have caching configured in one place. Serialization and deserialization could be a downside, but they also make it possible to write a single class that handles caching for the whole application. Having one cache class is better than having multiple caches scattered across an app.
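
To illustrate how the provider is selected purely by the extension method, here is a sketch of registering Redis instead of the in-memory implementation. It assumes the `Microsoft.Extensions.Caching.StackExchangeRedis` package is installed; the connection string and instance name are placeholders, not values from this post.

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // Development or single-server setup: in-process implementation.
        // services.AddDistributedMemoryCache();

        // Redis-backed implementation of the same IDistributedCache interface.
        services.AddStackExchangeRedisCache(options =>
        {
            options.Configuration = "localhost:6379"; // placeholder connection string
            options.InstanceName = "SampleInstance";  // placeholder key prefix
        });
    }
}
```

Code that consumes `IDistributedCache`, such as the controller action shown earlier, stays unchanged when the provider is swapped.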

When to use distributed memory cache?

- In development and testing scenarios

- When a single server is used in production and memory consumption isn’t an issue

If you would like to know more, I strongly recommend you to read more about:

All the code posted here can be found on my GitHub: https://github.com/mikuam/Blog