.NET Core is a catchphrase that you hear more and more often in both developer discussions and job offers. You have probably already heard that it’s fast, simple and runs on multiple platforms. In this post, I’d like to sum everything up and highlight what I like most about it.

Why a new framework?

Microsoft released the full .NET Framework more than a dozen years ago, and every version since has brought new features. What are its biggest drawbacks?

- it runs and can only be developed on Windows

- it’s not modular: you target the whole framework as a single dependency, rather than multiple packages

- ASP.NET is not fast enough compared to other frameworks

- it’s not open-source, so the community cannot really contribute

Because of that, Microsoft needed to introduce something new that would run on both Windows and Linux and be small, fast and modular. This is why .NET Core was introduced. Version 1.0 was developed in parallel with .NET Framework 4.6. It was initially released in the middle of 2016 as a set of open-source libraries hosted on GitHub.

https://codeburst.io/what-you-need-to-know-about-asp-net-core-30fec1d33d78

Cross-platform

Software written in .NET Core can now run on Windows, Linux and macOS. What’s even more important, you can also host .NET Core apps on any of these operating systems. So from now on, your ASP.NET website does not need IIS for hosting; it can be hosted on Linux. You can even build a Docker container from it and deploy it in the cloud. This is great in terms of maintenance, scalability, and performance. It also means that you no longer need Microsoft infrastructure to host your .NET app.

Modularity

In .NET Framework, new features arrived only with a new release of the whole framework. In .NET Core every library is a NuGet package, so your app does not depend on one monolithic library and each package can be versioned independently. For example, you can reference different versions of System.IO and System.Net.Http.

What’s more, you install only the basic dependency, Microsoft.NETCore.App, and add other packages depending on what you need. The result is a smaller app.
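As a rough illustration of this modularity, a .NET Core project file might pull in extra packages as shown below. The target framework (netcoreapp2.2) and the Newtonsoft.Json package with its version are assumptions picked for the example. With this kind of project, Microsoft.NETCore.App is referenced implicitly by the SDK, so it does not need its own entry.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <!-- Assumed target; use whichever .NET Core version you have installed -->
    <TargetFramework>netcoreapp2.2</TargetFramework>
  </PropertyGroup>

  <ItemGroup>
    <!-- Only the packages the app actually needs are listed, each with its own version -->
    <PackageReference Include="Newtonsoft.Json" Version="12.0.3" />
  </ItemGroup>

</Project>
```

Each additional package gets its own PackageReference line, so it can be updated without touching the others.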

Open-source

From the very beginning, .NET Core was published as a dozen or so GitHub repositories. Now there are 179 of them! You are free to look at what features are coming and what the core looks like. It also means that while writing code, you can step into a framework method to check what’s going on underneath.

A community eager to develop .NET Core has grown around those repositories and is constantly improving it. You can be a part of it! As with any GitHub repository, you can raise an issue if you notice a bug or create a pull request to propose a solution.

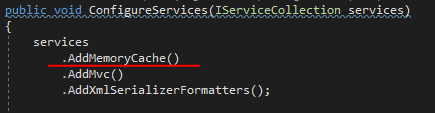

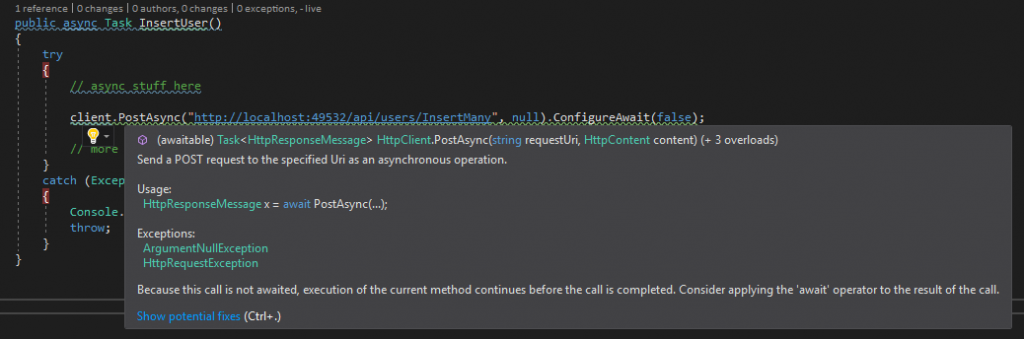

Easier to write code

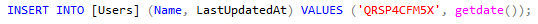

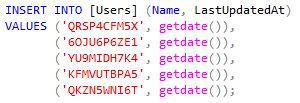

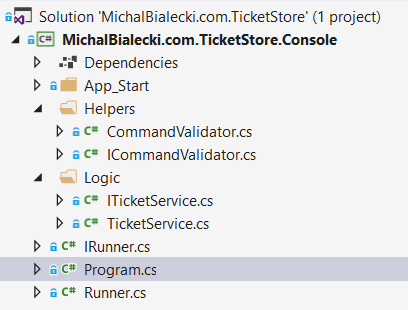

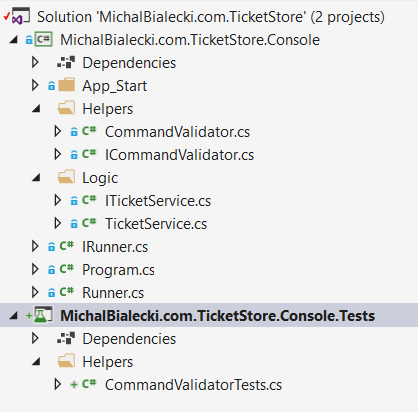

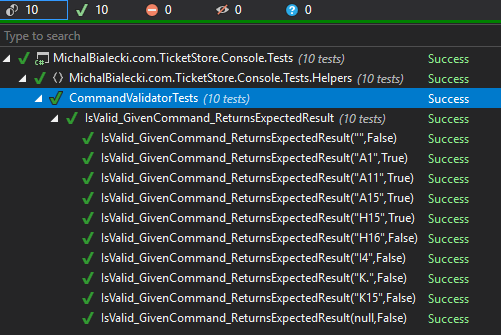

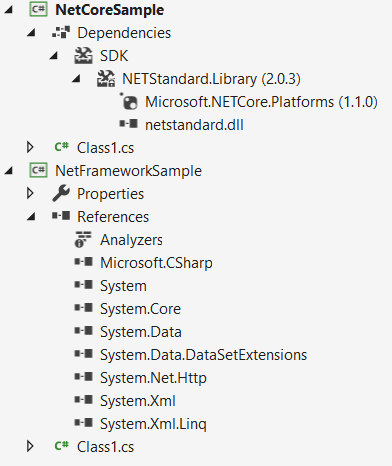

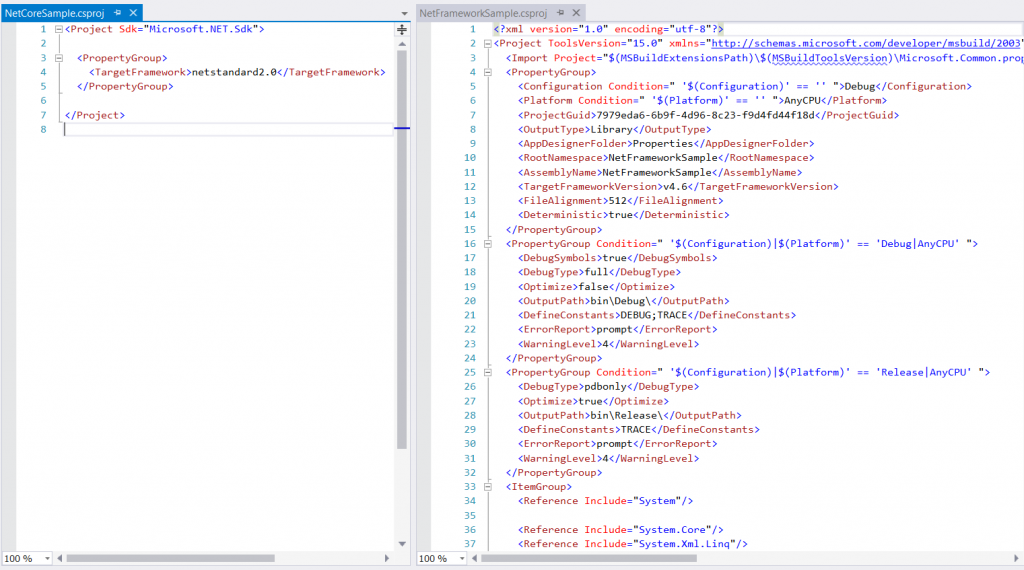

The biggest difference between .NET Framework and .NET Core is the project file. Instead of the verbose XML ProjectName.csproj we now have a much more compact, SDK-style ProjectName.csproj (the JSON-based project.json used by the first .NET Core tooling was later replaced by it). I created two sample projects, one in .NET Core and one in .NET Framework; have a look:

As you can see, there are fewer dependencies in .NET Core. The project file looks like this:

A .NET Core project file specifies only a target framework; nothing more is needed. This is an example of the simplest class library created with Visual Studio. As the project gets bigger, the difference becomes more noticeable. A great comparison of the two formats can be found here.
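For reference, a minimal SDK-style project file for a class library might look like the sketch below. The netcoreapp2.2 target is an assumption; the sample project described here may have used a different version.

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <!-- The target framework is the only required setting -->
    <TargetFramework>netcoreapp2.2</TargetFramework>
  </PropertyGroup>

</Project>
```

Source files do not have to be listed one by one, because the SDK includes the .cs files in the project folder by default. That is a large part of why the file stays this short.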

Performance

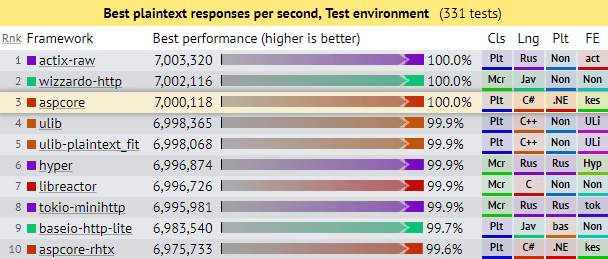

Since the developers could build the framework anew, they could test and tweak code that had previously been considered untouchable. Also, thanks to the rebuilt request pipeline, .NET Core is really fast when it comes to handling HTTP requests. Take a look at this comparison with other hosting environments:

https://www.ageofascent.com/2019/02/04/asp-net-core-saturating-10gbe-at-7-million-requests-per-second/

You can see that ASP.NET Core is the 3rd fastest web server in this comparison and can handle 7 million requests per second. This is impressive!

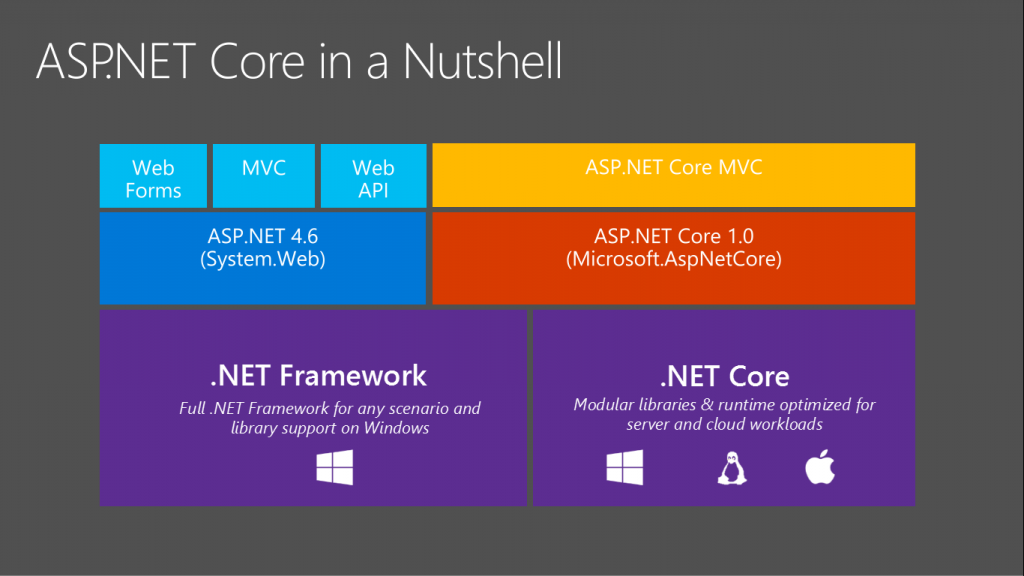

What is .NET Standard?

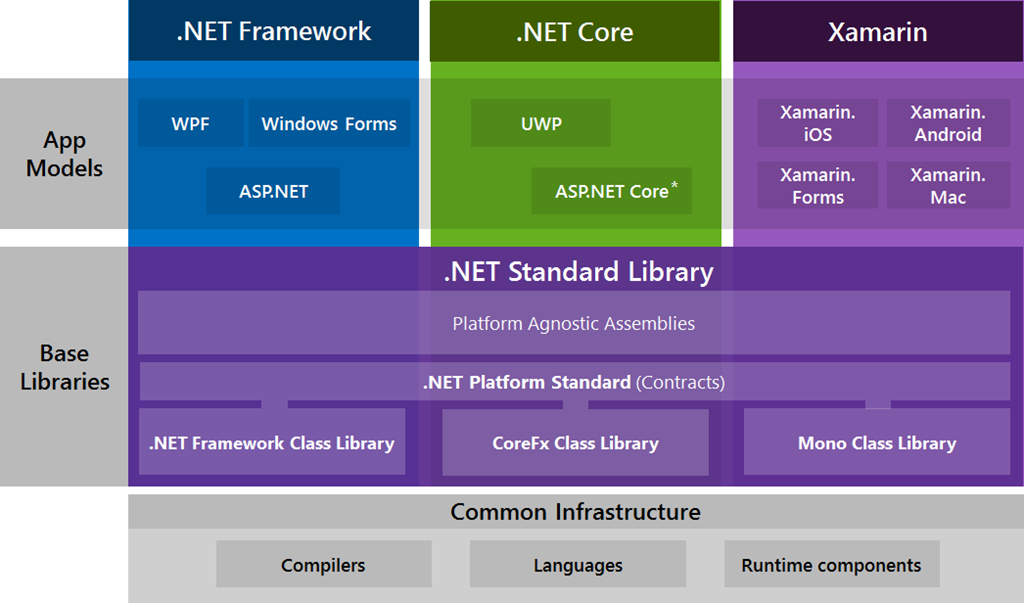

.NET Standard is a specification of the .NET APIs that are available across all platforms. Have a look at the lower part of this architecture:

https://gigi.nullneuron.net/gigilabs/multi-targeting-net-standard-class-libraries/

.NET Standard is implemented by .NET Framework, by CoreFX (.NET Core) and by Mono. This means that once your class library targets .NET Standard, it can run on any of those platforms, but you will have fewer APIs to choose from. If you target .NET Core instead, you can use all the features of .NET Core, but the library can be used only in .NET Core apps.
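In the project file, this choice comes down to the value of the target framework. A small sketch, assuming .NET Standard 2.0 and, as the alternative, a hypothetical library that would target .NET Core 2.2:

```xml
<Project Sdk="Microsoft.NET.Sdk">

  <PropertyGroup>
    <!-- Targets the .NET Standard 2.0 API set: usable from .NET Framework, .NET Core and Mono -->
    <TargetFramework>netstandard2.0</TargetFramework>

    <!-- Alternative: the full .NET Core API surface, but usable only from .NET Core apps -->
    <!-- <TargetFramework>netcoreapp2.2</TargetFramework> -->
  </PropertyGroup>

</Project>
```

Switching between the two is a one-line change, so it is reasonable to start with .NET Standard and move to a .NET Core target only if an API you need is missing.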

What to choose? In all my projects I target .NET Standard, and for building standard back-end services it has always been enough. Since version 2.0, .NET Standard supports a lot of APIs, and you shouldn’t have a problem making it work.

Can I use .NET Core from now on then?

Yes and no. I strongly recommend using it in your new projects, because it has multiple advantages. However, porting some legacy libraries or websites can be a hassle. .NET Standard and .NET Core support a lot of APIs, but not everything that was available in .NET Framework is available there. Also, some 3rd party libraries will not be ported at all, so a major change in your project might be needed. Some Microsoft libraries are already ported, some are still in progress and some have been totally rewritten. This means that .NET Core is not fully backward compatible with .NET Framework, and porting a library to it can be anything from easy to impossible.

All in all, using .NET Core is a step in the right direction, because Microsoft puts a lot of work into its development.

Thanks for reading and have a nice day!