How to receive an XML request in an ASP.NET MVC controller?

This question came up at work while I was integrating with a third-party service. An MVC controller is not ideal for handling such requests, but that was the task I got, so let’s get to it. This is the XML that I need to accept:

    <document>
        <id>123456</id>
        <content>This is document that I posted...</content>
        <author>Michał Białecki</author>
        <links>
            <link>2345</link>
            <link>5678</link>
        </links>
    </document>

I tried a few solutions based on built-in parameter deserialization, but none of them seemed to work, so in the end I deserialized the request in the method body. I created a static helper class with a generic method for it:

    using System.IO;
    using System.Xml.Serialization;

    public static class XmlHelper
    {
        // Deserializes an XML string into an instance of T.
        public static T XmlDeserializeFromString<T>(string objectData)
        {
            var serializer = new XmlSerializer(typeof(T));
            using (var reader = new StringReader(objectData))
            {
                return (T)serializer.Deserialize(reader);
            }
        }
    }

I decorated my DTO with XML attributes:

    using System.Xml.Serialization;

    [XmlRoot(ElementName = "document", Namespace = "")]
    public class DocumentDto
    {
        [XmlElement(DataType = "string", ElementName = "id")]
        public string Id { get; set; }

        [XmlElement(DataType = "string", ElementName = "content")]
        public string Content { get; set; }

        [XmlElement(DataType = "string", ElementName = "author")]
        public string Author { get; set; }

        [XmlElement(ElementName = "links")]
        public LinkDto Links { get; set; }
    }

    public class LinkDto
    {
        // Each <link> element inside <links> becomes one array item.
        [XmlElement(ElementName = "link")]
        public string[] Link { get; set; }
    }
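To check the serializer call and the attributes together without running the web application, a small console sketch can feed the sample XML through `XmlSerializer` directly. The `DeserializeDemo` class and its `Parse` method are hypothetical names used only for this illustration, and the DTOs are trimmed copies of the ones in this post (without the `DataType` settings).

```csharp
using System;
using System.IO;
using System.Xml.Serialization;

[XmlRoot(ElementName = "document", Namespace = "")]
public class DocumentDto
{
    [XmlElement(ElementName = "id")]
    public string Id { get; set; }

    [XmlElement(ElementName = "content")]
    public string Content { get; set; }

    [XmlElement(ElementName = "author")]
    public string Author { get; set; }

    [XmlElement(ElementName = "links")]
    public LinkDto Links { get; set; }
}

public class LinkDto
{
    [XmlElement(ElementName = "link")]
    public string[] Link { get; set; }
}

public static class DeserializeDemo
{
    // Hypothetical helper: same logic as a generic string-to-object XML helper.
    public static DocumentDto Parse(string xml)
    {
        var serializer = new XmlSerializer(typeof(DocumentDto));
        using (var reader = new StringReader(xml))
        {
            return (DocumentDto)serializer.Deserialize(reader);
        }
    }

    public static void Main()
    {
        const string xml =
            "<document><id>123456</id>" +
            "<content>This is document that I posted...</content>" +
            "<author>Michał Białecki</author>" +
            "<links><link>2345</link><link>5678</link></links></document>";

        var document = Parse(xml);
        Console.WriteLine(document.Id);
        Console.WriteLine(string.Join(",", document.Links.Link));

        try
        {
            Parse("<document><id>1</id>"); // root element is never closed
        }
        catch (InvalidOperationException ex)
        {
            // XmlSerializer reports malformed XML as InvalidOperationException.
            Console.WriteLine("Rejected: " + ex.GetType().Name);
        }
    }
}
```

The last part shows what happens with malformed input: the serializer throws, so any code calling it on a request body needs a `try`/`catch` around the call.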

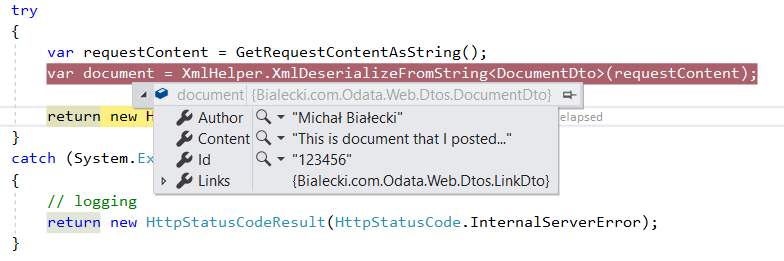

And used all of that in a controller:

    using System.IO;
    using System.Net;
    using System.Text;
    using System.Web.Mvc;

    public class DocumentsController : Controller
    {
        // documents/sendDocument
        [HttpPost]
        public ActionResult SendDocument()
        {
            try
            {
                var requestContent = GetRequestContentAsString();
                var document = XmlHelper.XmlDeserializeFromString<DocumentDto>(requestContent);

                // process the deserialized document here

                return new HttpStatusCodeResult(HttpStatusCode.OK);
            }
            catch (System.Exception)
            {
                // logging
                return new HttpStatusCodeResult(HttpStatusCode.InternalServerError);
            }
        }

        // Reads the raw request body as a UTF-8 string.
        private string GetRequestContentAsString()
        {
            using (var receiveStream = Request.InputStream)
            {
                using (var readStream = new StreamReader(receiveStream, Encoding.UTF8))
                {
                    return readStream.ReadToEnd();
                }
            }
        }
    }

To use it, just send a request with a tool such as Postman. I’m sending a POST request to the http://localhost:51196/documents/sendDocument endpoint with the XML body shown above. One detail worth mentioning is the header: add Content-Type: text/xml for the request to work.
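If you would rather send the request from code than from Postman, the same POST can be built with `HttpClient` types. This is only a sketch: `XmlRequestDemo` and `BuildRequest` are hypothetical names, and the body is shortened to a single element. It builds and prints the request without sending it, so it runs even when the application is not started.

```csharp
using System;
using System.Net.Http;
using System.Text;

public static class XmlRequestDemo
{
    // Hypothetical helper: builds a POST request with an XML body.
    public static HttpRequestMessage BuildRequest(string url, string xml)
    {
        return new HttpRequestMessage(HttpMethod.Post, url)
        {
            // StringContent sets the Content-Type header from the media type
            // and the encoding passed here.
            Content = new StringContent(xml, Encoding.UTF8, "text/xml")
        };
    }

    public static void Main()
    {
        var request = BuildRequest(
            "http://localhost:51196/documents/sendDocument",
            "<document><id>123456</id></document>");

        Console.WriteLine(request.Method + " " + request.RequestUri);
        Console.WriteLine("Content-Type: " + request.Content.Headers.ContentType);

        // With the application running, the request could be sent with:
        // var response = new HttpClient().SendAsync(request).Result;
    }
}
```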

And it works:

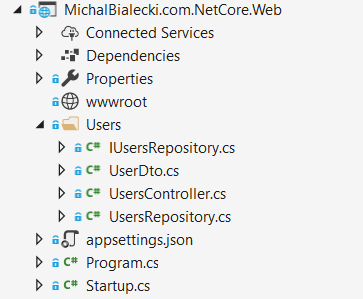

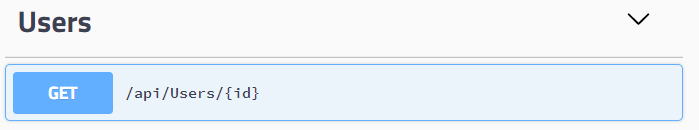

.NET Core API solution

With my task solved, I wondered how I would solve it if I could choose a different approach. My choice is obvious: use .NET Core and a controller with better API support. The document DTO looks the same, but deserialization is way simpler, because the framework handles all of it.

In the ConfigureServices method of the Startup class, you should have:

    services
        .AddMvc()
        .AddXmlSerializerFormatters();

And my DocumentsController looks like this:

    [Route("api/Documents")]
    public class DocumentsController : Controller
    {
        [Route("SendDocument")]
        [HttpPost]
        public ActionResult SendDocument([FromBody]DocumentDto document)
        {
            return Ok();
        }
    }

And that’s it! Sending the same document to the api/documents/SendDocument endpoint just works.

Can I accept both XML and JSON in one endpoint?

Yes, you can. It does not require any change to the code posted above; it’s just a matter of formatting the input data correctly and sending the matching Content-Type header (application/json instead of text/xml). The same XML document from above looks like this in JSON:

    {
        "id": "123456",
        "content": "This is document that I posted...",
        "author": "Michał Białecki",
        "links": {
            "link": ["2345", "5678"]
        }
    }
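To see that both formats really end up in the same DTO, the two payloads can be deserialized side by side in a console program. This is a sketch outside ASP.NET Core, not a picture of how the framework is wired internally. It assumes `System.Text.Json` (available from .NET Core 3.0) for the JSON side, while projects of the era of this post would typically have used Json.NET. `BothFormatsDemo`, `FromXml` and `FromJson` are hypothetical names, and the payloads are shortened. Note that `System.Text.Json` requires property names in double quotes.

```csharp
using System;
using System.IO;
using System.Text.Json;
using System.Xml.Serialization;

[XmlRoot(ElementName = "document", Namespace = "")]
public class DocumentDto
{
    [XmlElement(ElementName = "id")] public string Id { get; set; }
    [XmlElement(ElementName = "content")] public string Content { get; set; }
    [XmlElement(ElementName = "author")] public string Author { get; set; }
    [XmlElement(ElementName = "links")] public LinkDto Links { get; set; }
}

public class LinkDto
{
    [XmlElement(ElementName = "link")] public string[] Link { get; set; }
}

public static class BothFormatsDemo
{
    public static DocumentDto FromXml(string xml)
    {
        var serializer = new XmlSerializer(typeof(DocumentDto));
        using (var reader = new StringReader(xml))
        {
            return (DocumentDto)serializer.Deserialize(reader);
        }
    }

    public static DocumentDto FromJson(string json)
    {
        // Case-insensitive matching maps "id" to Id, "links" to Links, and so on.
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        return JsonSerializer.Deserialize<DocumentDto>(json, options);
    }

    public static void Main()
    {
        var fromXml = FromXml(
            "<document><id>123456</id>" +
            "<links><link>2345</link><link>5678</link></links></document>");
        var fromJson = FromJson(
            "{\"id\": \"123456\", \"links\": {\"link\": [\"2345\", \"5678\"]}}");

        // Both lines compare the values read from the two formats.
        Console.WriteLine(fromXml.Id == fromJson.Id);
        Console.WriteLine(
            string.Join(",", fromXml.Links.Link) == string.Join(",", fromJson.Links.Link));
    }
}
```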

I’m not sure why I couldn’t use the built-in framework deserialization in the MVC Controller class. Maybe I did something wrong, or maybe this class is just not made for such a case. A Web API controller would probably handle it much more smoothly.

You can find all the code posted here in my GitHub repository: https://github.com/mikuam/Blog

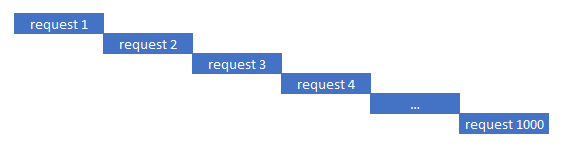

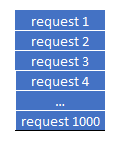

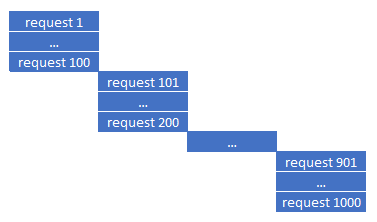

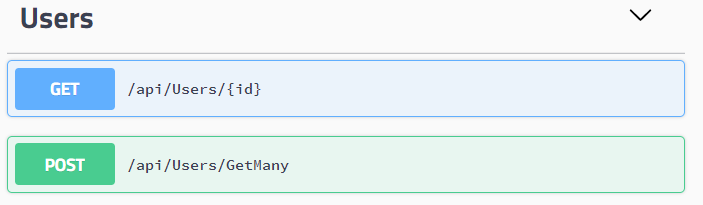

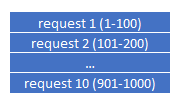

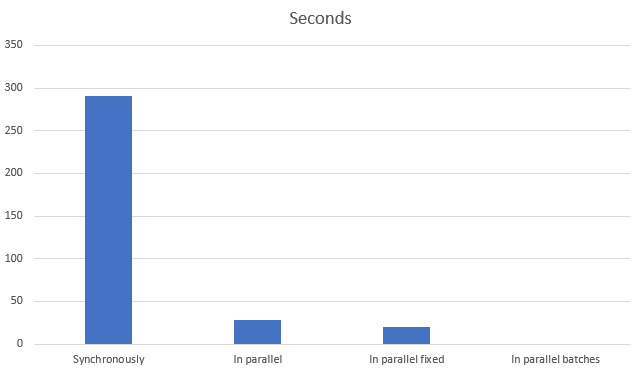

I also wrote a post about parallel processing in .NET Core that you might want to have a look at: https://www.michalbialecki.com/2018/04/19/how-to-send-many-requests-in-parallel-in-asp-net-core/