Microsoft Orleans is a developer-friendly framework for building distributed, high-scale computing applications. It is well suited to processing large amounts of data quickly. It shows its strengths especially when you need storage while processing the data, because it keeps grain state in memory, so reading and updating state is very fast.

If you want to know more about Microsoft Orleans, read my previous post about it: https://www.michalbialecki.com/2018/03/05/getting-started-microsoft-orleans/

Getting started with Microsoft Orleans for .Net Core

Microsoft Orleans 2.0 is the version that targets .NET Standard, so it can be used by applications targeting both .NET Core and the full .NET Framework. You can have a look at its GitHub repo here: https://github.com/dotnet/orleans.

There is also a very good Microsoft page with up-to-date documentation: https://dotnet.github.io/orleans/Documentation/2.0/New.html

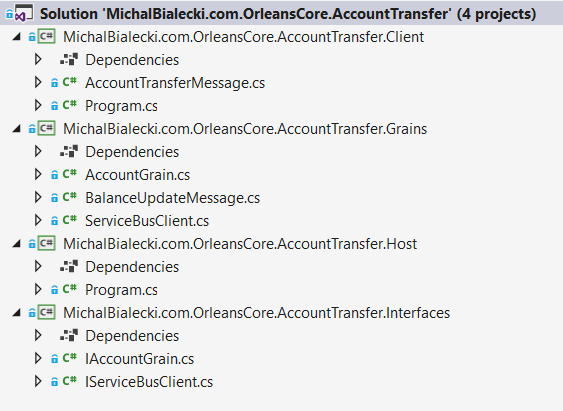

A regular Orleans solution consists of four projects: Grains, a library with the Orleans actor classes; Interfaces, the abstractions for grains that other libraries use; Host, a project that runs a silo; and Client, a project that connects to the Host and executes client code.

Have a look at the project structure: thanks to .NET Core, it is simple and minimal.

Persistent storage in Microsoft Orleans

Microsoft Orleans offers a variety of options for saving grain state. With one of the provided mechanisms, you can save grain state without writing any code, just by providing the proper configuration. You can also write your own provider by implementing the low-level interfaces. Here are some storage provider methods you can use when configuring the silo host:

- AddMemoryGrainStorage – grain state is kept in memory only, so it is lost when the silo goes down or a new version is deployed

- AddAzureBlobGrainStorage – Azure Blob storage will be used

- AddAzureTableGrainStorage – Azure Table storage will be used; the Cosmos DB Table API is also compatible

- AddAdoNetGrainStorage – ADO.NET storage in a relational database such as SQL Server

- AddDynamoDBGrainStorage – Amazon AWS DynamoDB storage

Note that the Blob and Azure Table extension methods are available once the Microsoft.Orleans.Persistence.AzureStorage package is installed. The ADO.NET extension method is in the Microsoft.Orleans.Persistence.AdoNet package, and the DynamoDB extension method is in the Microsoft.Orleans.Persistence.DynamoDB package.

To persist grain state, the grain class needs to extend Grain<T> instead of Grain, where T is the application data type that will be persisted. You can also set a storage provider name on the grain class, like this; if you do not, the default provider is used.

    [StorageProvider(ProviderName = "AzureTable")]
    public class AccountGrain : Grain<Balance>, IAccountGrain
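The name in the attribute has to match a provider registered on the silo under the same name. Here is a minimal sketch of such a registration, assuming Orleans 2.0 with the Microsoft.Orleans.Persistence.AzureStorage package installed. The StorageConfigSketch class, the AddAccountStorage helper and the "Memory" provider name are hypothetical and only serve as illustration.

```csharp
using Orleans.Hosting;

// Hypothetical helper class, not part of the original project.
public static class StorageConfigSketch
{
    // connectionString is a placeholder supplied by the caller.
    public static ISiloHostBuilder AddAccountStorage(
        ISiloHostBuilder builder, string connectionString)
    {
        return builder
            // Named provider, picked up by grains marked with
            // [StorageProvider(ProviderName = "AzureTable")].
            .AddAzureTableGrainStorage("AzureTable", options =>
            {
                options.ConnectionString = connectionString;
                options.UseJson = true;
            })
            // A second named provider (hypothetical name "Memory"),
            // e.g. for grains whose state does not need to survive a restart.
            .AddMemoryGrainStorage("Memory");
    }
}
```

With more than one provider registered, each grain class can choose its storage through the attribute, so changing the backing store for a grain comes down to changing the provider name.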

Read and write state in the grain

Grain state is read automatically from the storage provider when the grain is activated, before the OnActivateAsync() method is called. The grain is responsible for saving its state by calling the base.WriteStateAsync() method. The write is asynchronous: the state is only known to be persisted once the Task returned by WriteStateAsync() has completed, so you should await it. To be sure that the grain uses the latest data from persistent storage, you can re-read it manually with the base.ReadStateAsync() method.
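A minimal sketch of these calls, assuming Orleans 2.0. The ICounterGrain, CounterState and CounterGrain types are hypothetical and are not part of the example project; ReadStateAsync(), WriteStateAsync() and ClearStateAsync() are the methods inherited from Grain<T>.

```csharp
using System;
using System.Threading.Tasks;
using Orleans;

// Hypothetical grain interface, for illustration only.
public interface ICounterGrain : IGrainWithIntegerKey
{
    Task<int> Increment();
    Task<int> Reload();
    Task Reset();
}

// Hypothetical state type stored by the configured provider.
[Serializable]
public class CounterState
{
    public int Count { get; set; }
}

public class CounterGrain : Grain<CounterState>, ICounterGrain
{
    public async Task<int> Increment()
    {
        // State was already loaded from storage on activation.
        State.Count++;

        // Persist the change; await so that failures surface here.
        await WriteStateAsync();
        return State.Count;
    }

    public async Task<int> Reload()
    {
        // Re-read from storage, replacing the in-memory copy.
        await ReadStateAsync();
        return State.Count;
    }

    // Remove the stored state for this grain.
    public Task Reset() => ClearStateAsync();
}
```

Because the in-memory State is the working copy, a grain usually only needs ReadStateAsync() when something outside the grain may have changed the stored data.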

Configuring CosmosDB Table API persistent storage

First, I will make AccountGrain extend Grain<Balance>, where the Balance class represents my state.

namespace MichalBialecki.com.OrleansCore.AccountTransfer.Grains

{

[Serializable]

public class Balance

{

public decimal Value { get; set; } = 0;

}

public class AccountGrain : Grain<Balance>, IAccountGrain

{

private readonly IServiceBusClient serviceBusClient;

public AccountGrain(IServiceBusClient serviceBusClient)

{

this.serviceBusClient = serviceBusClient;

}

async Task IAccountGrain.Deposit(decimal amount)

{

this.State.Value += amount;

await this.WriteStateAsync();

await NotifyBalanceUpdate();

}

async Task IAccountGrain.Withdraw(decimal amount)

{

this.State.Value -= amount;

await this.WriteStateAsync();

await NotifyBalanceUpdate();

}

Task<decimal> IAccountGrain.GetBalance()

{

return Task.FromResult(this.State.Value);

}

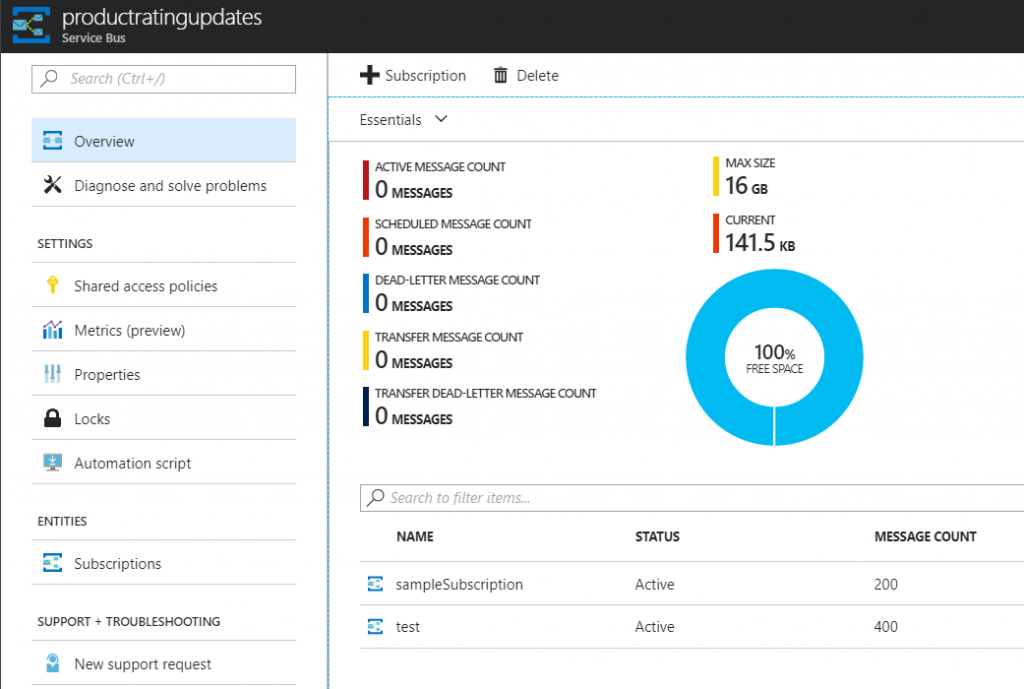

private async Task NotifyBalanceUpdate()

{

var balanceUpdate = new BalanceUpdateMessage

{

AccountNumber = (int)this.GetPrimaryKeyLong(),

Balance = this.State.Value

};

var message = new Message(Encoding.UTF8.GetBytes(JsonConvert.SerializeObject(balanceUpdate)));

await serviceBusClient.SendMessageAsync(message);

}

}

}

I am using the NotifyBalanceUpdate method to send a Service Bus message with the updated state. Notice that I save the state with the this.WriteStateAsync() method right after I update it.
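For context, here is a sketch of what the grain interface and a call from the Client project could look like. The interface shape is inferred from the explicit implementations in the grain above and from its use of GetPrimaryKeyLong(); the actual definition in the repository may differ. The AccountClientSketch class and DepositAndReadAsync helper are hypothetical.

```csharp
using System.Threading.Tasks;
using Orleans;

// Inferred shape: an integer-keyed grain with the three methods
// that AccountGrain implements explicitly.
public interface IAccountGrain : IGrainWithIntegerKey
{
    Task Deposit(decimal amount);
    Task Withdraw(decimal amount);
    Task<decimal> GetBalance();
}

// Hypothetical helper class, for illustration only.
public static class AccountClientSketch
{
    // `client` is assumed to be an already connected IClusterClient.
    public static async Task<decimal> DepositAndReadAsync(
        IClusterClient client, long accountNumber, decimal amount)
    {
        // Get a reference to the grain; Orleans activates it on demand
        // and loads its state from the storage provider.
        var account = client.GetGrain<IAccountGrain>(accountNumber);

        await account.Deposit(amount);
        return await account.GetBalance();
    }
}
```

The caller never deals with storage directly: persistence happens inside the grain, which is why the client code stays this small.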

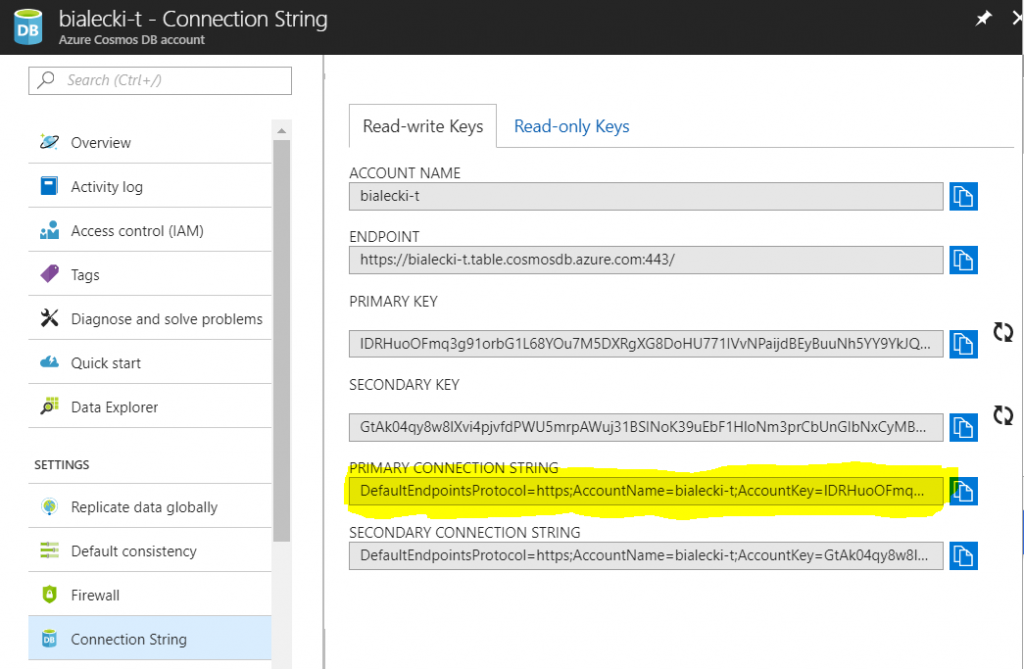

The next thing to do is to set the right configuration in the Host project's Program.cs file.

    private static async Task<ISiloHost> StartSilo()
    {
        var builder = new SiloHostBuilder()
            // Single-silo cluster on the local machine, for development.
            .UseLocalhostClustering()
            .Configure<EndpointOptions>(options => options.AdvertisedIPAddress = IPAddress.Loopback)
            .ConfigureServices(context => ConfigureDI(context))
            .ConfigureLogging(logging => logging.AddConsole())
            // Register Azure Table storage as the default grain storage provider.
            .AddAzureTableGrainStorageAsDefault(
                (options) => {
                    options.ConnectionString = CosmosBDConnectionString;
                    // Store the grain state as JSON.
                    options.UseJson = true;
                });

        var host = builder.Build();
        await host.StartAsync();
        return host;
    }

This is a very simple configuration: I use the AddAzureTableGrainStorageAsDefault extension method, provide a connection string to the Cosmos DB Table API storage, and set a flag saying that I would like the data to be saved as JSON.

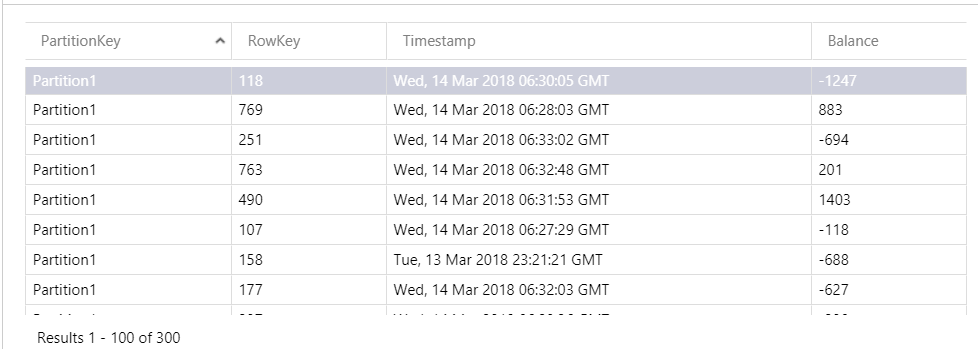

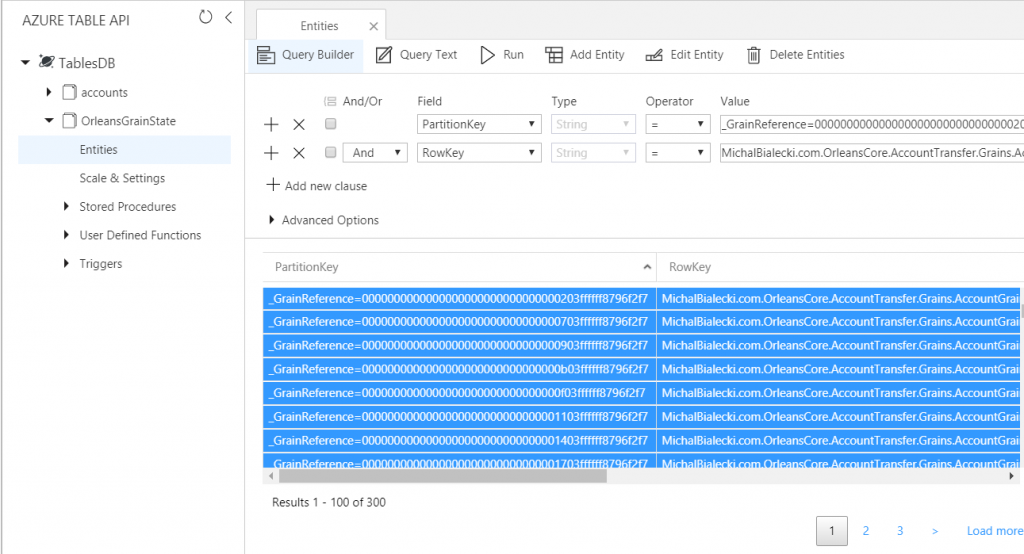

After running my application in Azure Portal I can see OrleansGrainState table, that was automatically created and this is what it contains:

You can read more about grain persistence on this Microsoft page: https://dotnet.github.io/orleans/Documentation/Core-Features/Grain-Persistence.html

All the code shown here is available in my GitHub repository: https://github.com/mikuam/orleans-core-example.