Recently I got my hands on NDepend, a static code analysis tool for the .NET Framework. Because it works as a Visual Studio plugin, it integrates tightly with your code and gives results quickly. So what can it do? Let’s see!

Getting started

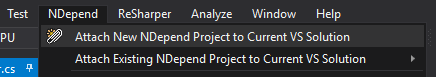

To start working with NDepend, you need to download it and install it for your Visual Studio. It’s not a free tool, but you can start with a 14-day trial. Then you need to attach a new NDepend project to your solution.

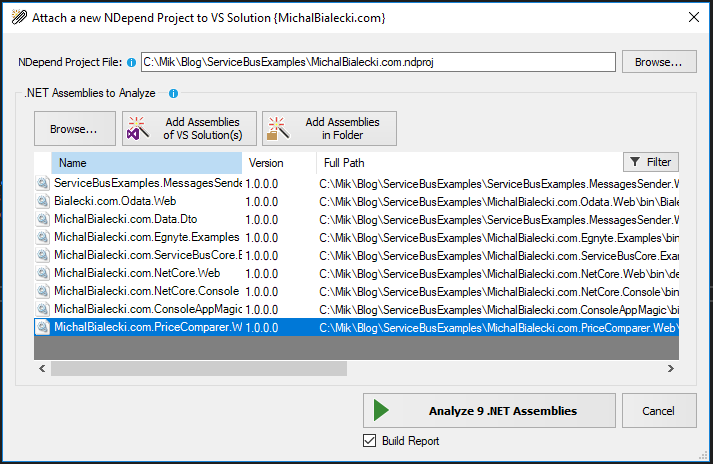

And choose assemblies to analyze.

Then wait a few seconds… and voilà! You can see a dashboard with the results or a full HTML report.

What does it mean?

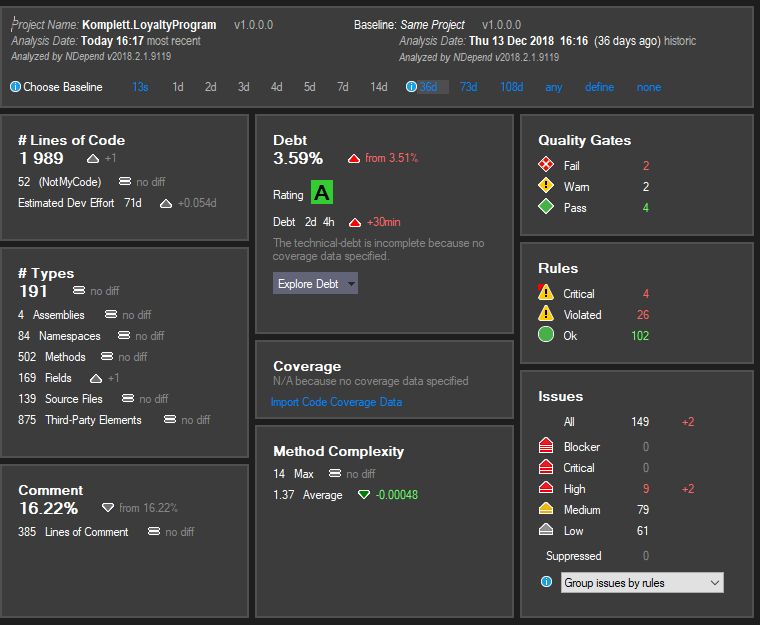

NDepend analyzes code in many ways, checking whether it is written correctly. At the top, you can see that it compared the current code with a version from 36 days earlier and shows how it has changed. Let’s have a look at some of the results:

- Lines of Code – the number of logical lines of code, that is, code that contains some logic. Quoting the website: “Interfaces, abstract methods, and enumerations have a LOC equals to 0. Only concrete code that is effectively executed is considered when computing LOC”

- Debt – an estimation of the effort needed to fix issues. The debt ratio is obtained by comparing the debt with an estimation of the effort needed to develop the analyzed code, hence the percentage. These are numbers that you can use to compare different versions of your app and to plan refactorings

- Types – alongside LOC, shows how big your project is and how big the recent change was

- Comment – how much of the code is covered by comments

- Quality gates – an overall check that tells you whether the code is good enough to proceed with (for example, to merge it)

- Rules – shows which rules are violated and at what level

- Issues – problems with your code that need attention

To clear this up: you might have 149 issues that fall under 30 failing rules, which in turn can cause two main quality gates to fail.
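
To make the debt ratio from the list above more concrete, here is a minimal sketch in Python. The function name `debt_ratio` and the figures are made up for illustration. NDepend produces its own estimates, and this only shows the division described above.

```python
def debt_ratio(debt_hours: float, development_effort_hours: float) -> float:
    """Return the debt as a percentage of the estimated development effort."""
    if development_effort_hours <= 0:
        raise ValueError("development effort must be positive")
    return 100.0 * debt_hours / development_effort_hours


# Hypothetical figures: 12 hours of debt against 400 hours of estimated effort.
ratio = debt_ratio(12, 400)
print(f"{ratio:.1f}%")  # prints 3.0%
```

Tracking this one number between versions is what lets you see whether a change paid debt off or added to it.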

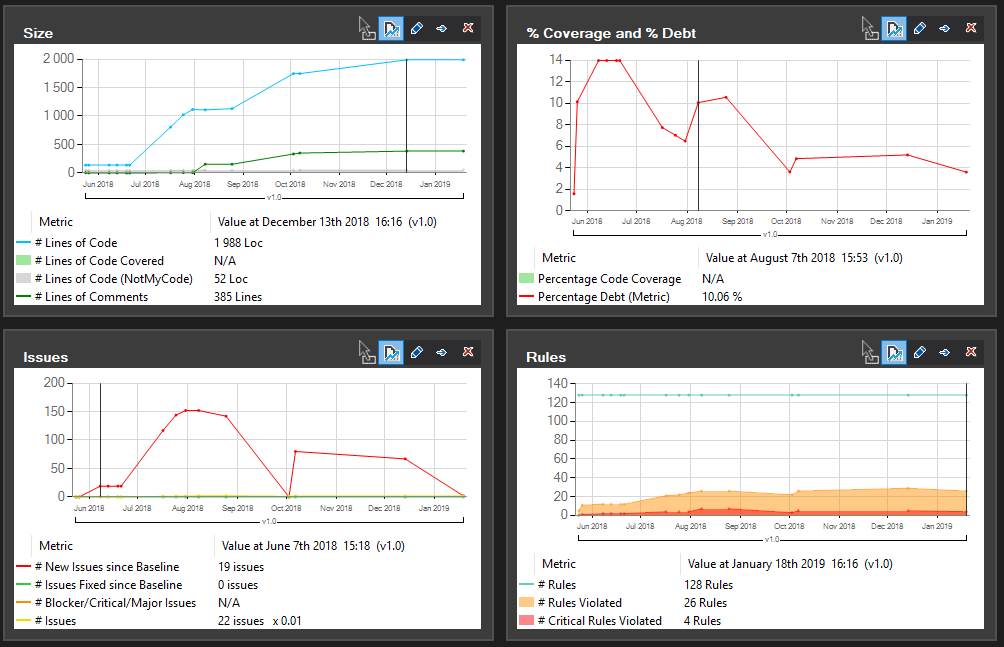

It gets more interesting when you analyze your project on a day-to-day basis and start to notice trends and the effects of recent changes. This is an insight you cannot get on your own, even if you know the project very well. Let’s look at the analysis of a small micro-service over its whole life – a few months. You have already seen the dashboard, so here are some charts.

You can see how the project is getting bigger and how the number of issues is rising and then dropping. This is a normal phase of software development, where you code first and fix bugs later. You can also see that we started to write comments after a few months of project development.

The report

Along with the analysis, NDepend created a full HTML report that presents even more data.

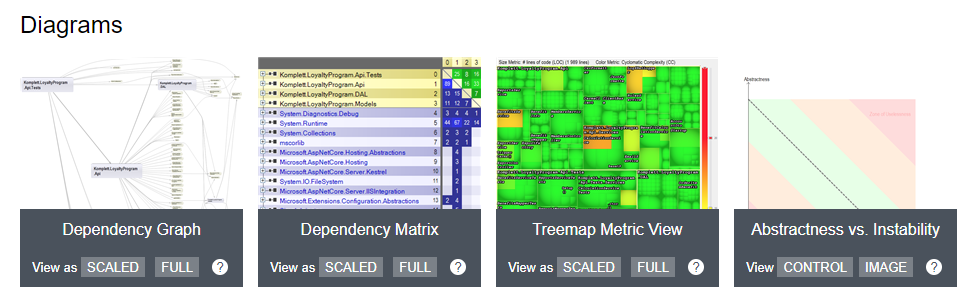

There are some useful diagrams:

- Dependency Graph – shows you how your assemblies are connected to each other

- Dependency Matrix – sums up all dependencies, so you can easily see which assemblies have way too much responsibility

- Treemap Metric View – uses color to show which namespaces have issues

- Abstractness vs. Instability – points out which assemblies in your solution might need attention
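
The Abstractness vs. Instability diagram builds on Robert C. Martin's package metrics. As a rough sketch in Python, with hypothetical function names and made-up counts (how NDepend counts types and couplings in detail is not covered here), the two axes and the distance from the ideal line can be computed like this:

```python
def instability(afferent: int, efferent: int) -> float:
    """I = Ce / (Ca + Ce); 0 means fully stable, 1 means fully unstable."""
    total = afferent + efferent
    return efferent / total if total else 0.0


def abstractness(abstract_types: int, total_types: int) -> float:
    """A = abstract types / all types; 0 means fully concrete."""
    return abstract_types / total_types if total_types else 0.0


def distance_from_main_sequence(a: float, i: float) -> float:
    """D = |A + I - 1|; 0 is ideal, values near 1 deserve a closer look."""
    return abs(a + i - 1)


# Hypothetical assembly: 2 abstract types out of 20,
# used by 8 other assemblies (Ca) and using 2 itself (Ce).
a = abstractness(2, 20)
i = instability(8, 2)
print(f"A={a:.2f} I={i:.2f} D={distance_from_main_sequence(a, i):.2f}")
# prints A=0.10 I=0.20 D=0.70
```

An assembly like this one is concrete and heavily depended upon, so changing it is painful, which is exactly the kind of candidate the diagram highlights.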

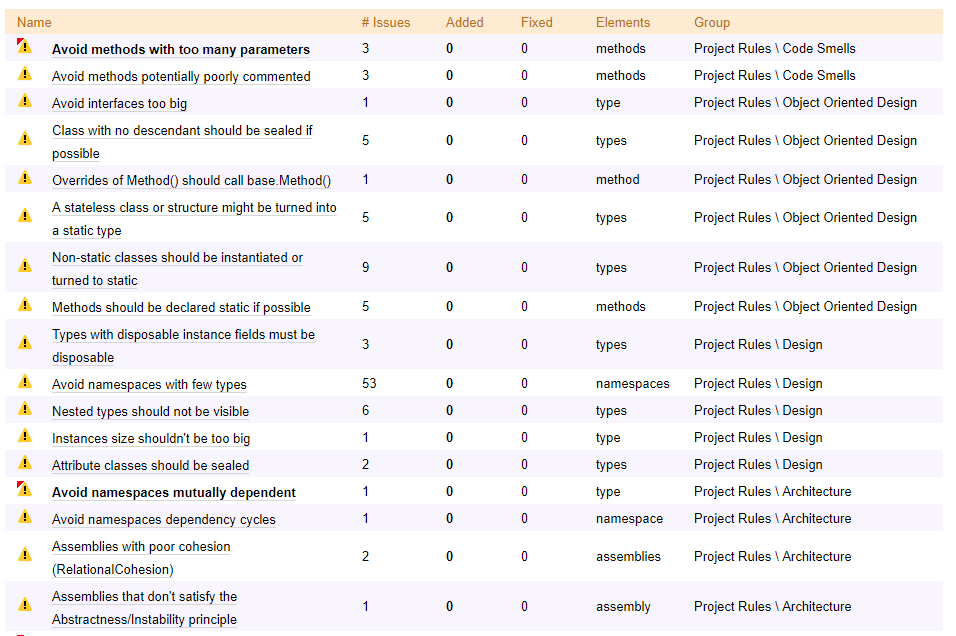

The report also lists rules that are violated by your app.

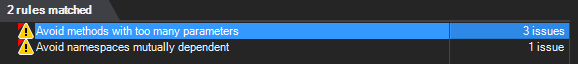

There are many rules that check our code, from object-oriented programming and potential code smells to best practices in design and architecture. You can see the same report in Visual Studio and easily navigate to the code that has problems. Let’s click on the first one: avoid methods with too many parameters.

Double-clicking the rule then takes us to the places in the code where it applies.

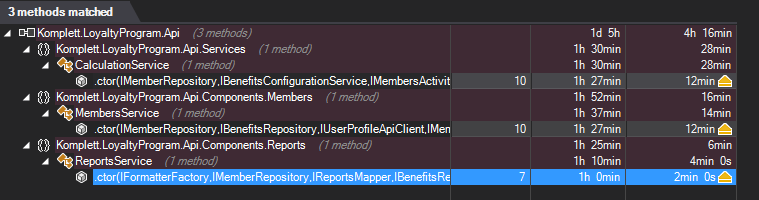

In this example, the constructor has too many parameters: 10. This might mean that these classes have too much responsibility and need refactoring. Writing tests for them might also be challenging.

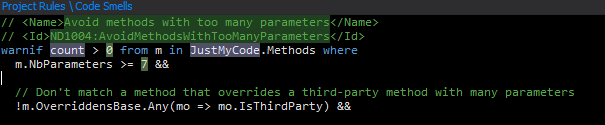

What is cool about NDepend is that every rule can be customized and edited, because the rules are written in C#. We can have a quick look at this rule and check how many parameters a method is allowed to have.

So 7 is the number here 🙂 Methods with 6 parameters are OK.
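
To illustrate the idea behind such a threshold, here is a small sketch in Python. It is not NDepend's rule, which lives inside the tool and is written in C#. The names `MAX_PARAMETERS` and `has_too_many_parameters` are hypothetical, and the limit of 6 is simply taken from the text above.

```python
import inspect

MAX_PARAMETERS = 6  # assumption from the text: 7 or more parameters get flagged


def has_too_many_parameters(func, limit: int = MAX_PARAMETERS) -> bool:
    """Return True when func declares more than `limit` parameters."""
    return len(inspect.signature(func).parameters) > limit


def six(a, b, c, d, e, f):
    """A function that stays within the limit."""


def seven(a, b, c, d, e, f, g):
    """A function that exceeds the limit."""


print(has_too_many_parameters(six))    # False
print(has_too_many_parameters(seven))  # True
```

Because the limit is just a value in the rule, a team that finds 7 too strict or too lenient can change it instead of ignoring the warnings.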

What is it for?

I showed you that this tool can analyze code and show some advanced metrics, but what can I use it for in my project?

- Finds code smells – it’s ideal for identifying technical debt that needs fixing. When you plan a technical sprint in which you only polish the code, this tool is perfect for spotting flaws

- It’s quick – you can easily analyze a new project and check if it is written following good practices

- Shows progress – you can track how your codebase quality changes while you’re changing the code

That last point surprised me. Some time ago I was doing quite a big refactoring in a legacy project. I was convinced that deleting a lot of difficult code and introducing simpler, shorter classes would dramatically improve codebase quality. In fact, technical debt grew a little, because I didn’t follow all the good practices. The old code seemed illogical and hard to debug, while the new code is easier to understand only because I wrote it.

It shocked me

I used NDepend on a project I’m proud of, one I was involved in from the beginning and spent a lot of time making as close to perfect as was possible in a reasonable time. As a group of senior developers, we almost always find something during a code review that could be done better or is at least worth discussing. I was sure that the code was really good. How shocked I was when I found out that NDepend didn’t fully agree. The project did get an A rating, but there were still a few things that could have been done better. And this is its real value.

Would I recommend it?

I have to admit that I got an NDepend license for free to test it out. If it weren’t for Patrick Smacchia from NDepend, I wouldn’t have discovered it anytime soon. And I would never have learned that no matter how good you get at your work, you can always do better.

I recommend that you try NDepend yourself. Brace yourself… you might be surprised 🙂

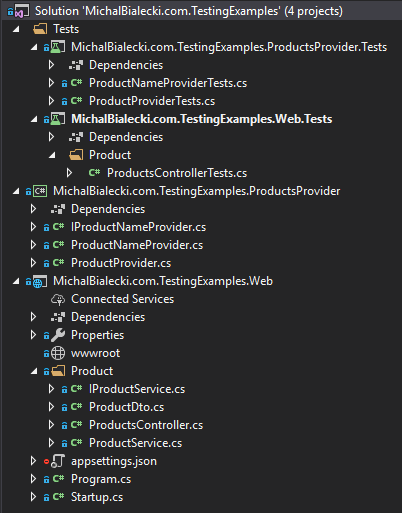

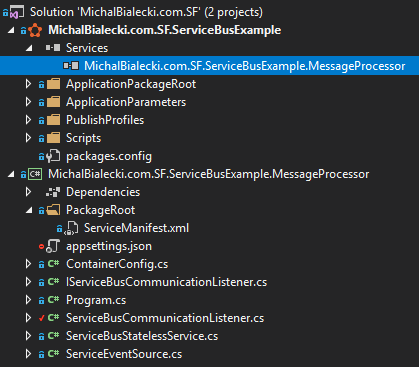

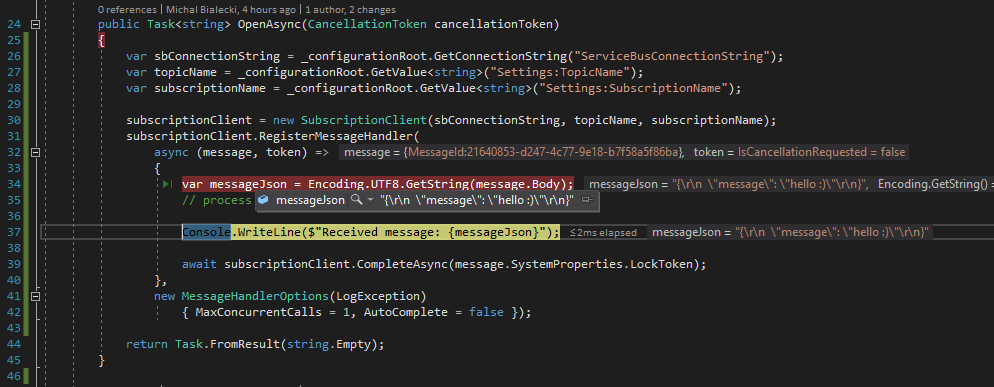

This is one of the proof of concent that I did at work. The idea was to add another Service Bus application to an existing solution, instead of starting a whole new micro-service. It was a lot faster just to add another .net core console application, but setting up Service Fabric cluster always brings some unexpected experiences.

This is one of the proof of concent that I did at work. The idea was to add another Service Bus application to an existing solution, instead of starting a whole new micro-service. It was a lot faster just to add another .net core console application, but setting up Service Fabric cluster always brings some unexpected experiences.

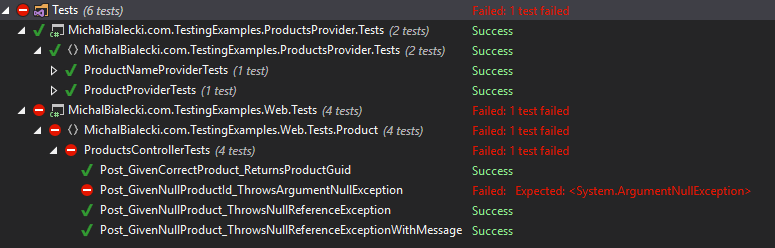

Imagine you are a Junior .Net Developer and you just started your development career. You got your first job and you are given a task – write unit tests!

Imagine you are a Junior .Net Developer and you just started your development career. You got your first job and you are given a task – write unit tests!

Important thing is to follow patterns that are visible in your project and stick to it. Naming things correctly might sound obvious and silly, but it’s crucial for code organization and it’s visibility.

Important thing is to follow patterns that are visible in your project and stick to it. Naming things correctly might sound obvious and silly, but it’s crucial for code organization and it’s visibility.