For some time now we have been watching the new .NET Core framework grow and mature. Version 2.0 was released in August 2017; it is more capable and supports more platforms than its previous releases. But the biggest feature of this brand new Microsoft framework is its performance and its ability to handle HTTP requests much faster than its bigger brother.

However, the introduction of a new framework is only the beginning, as more and more packages are being ported or rewritten for the new, lighter framework. This is a natural opportunity for developers to implement some additional changes, refactor existing code or slightly simplify an existing API. It also means that porting existing solutions to .NET Core might not be straightforward, and in this article I’ll check what receiving messages from Service Bus looks like.

First things first

In order to start receiving messages, we need to:

- Have an Azure Service Bus subscription (you can read here how to get one)

- Create a Service Bus namespace, topic and subscription

- Send messages to the created topic (this post explains how you can do it)

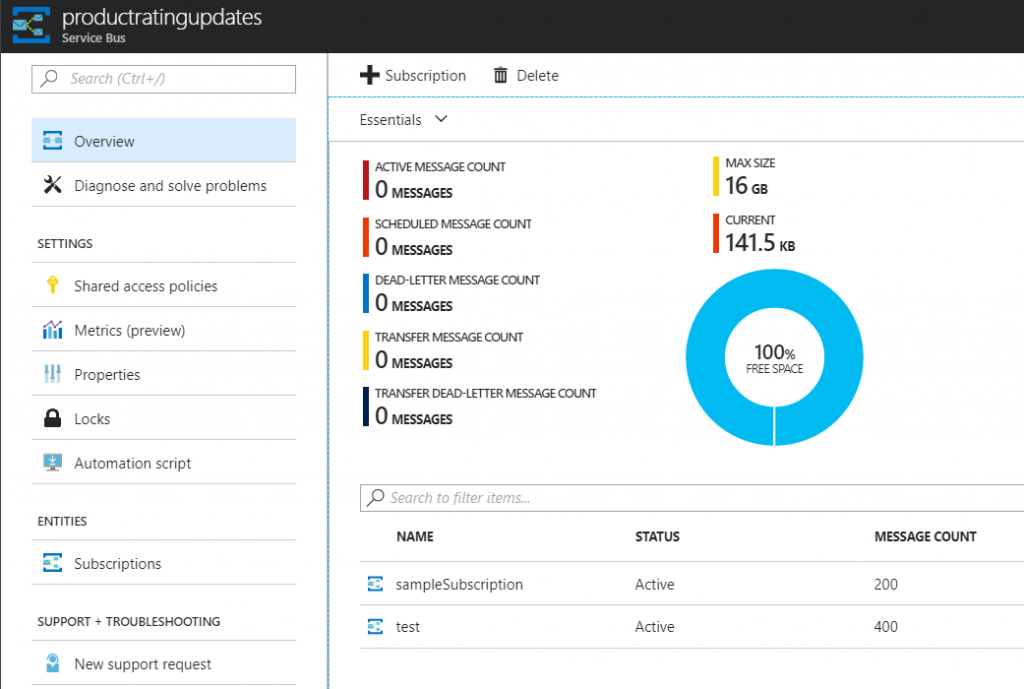

After all of this, my topic looks like this:

Receiving messages

To demonstrate how to receive messages, I created a console application targeting .NET Core 2.0. Then I installed the Microsoft.Azure.ServiceBus (v2.0) NuGet package, and also Newtonsoft.Json to parse message bodies. My ProductRatingUpdateMessage message class looks like this:

    public class ProductRatingUpdateMessage
    {
        public int ProductId { get; set; }

        public int SellerId { get; set; }

        public int RatingSum { get; set; }

        public int RatingCount { get; set; }
    }
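The receiving code in this post decodes the message body as UTF-8 text and parses it as JSON, so it assumes the sender encoded the message the same way. As a minimal sketch of that assumption, the round trip could look like the block below. `MessageBodyCodec` is a hypothetical helper name introduced only for illustration, and the message class is repeated so the snippet compiles on its own.

```csharp
using System.Text;
using Newtonsoft.Json;

// Repeated from the article so the sketch is self-contained.
public class ProductRatingUpdateMessage
{
    public int ProductId { get; set; }
    public int SellerId { get; set; }
    public int RatingSum { get; set; }
    public int RatingCount { get; set; }
}

// Hypothetical helper, not part of any library.
public static class MessageBodyCodec
{
    // Sender side (assumed): object -> JSON text -> UTF-8 bytes.
    public static byte[] Encode(ProductRatingUpdateMessage message)
    {
        return Encoding.UTF8.GetBytes(JsonConvert.SerializeObject(message));
    }

    // Receiver side: UTF-8 bytes -> JSON text -> object.
    public static ProductRatingUpdateMessage Decode(byte[] body)
    {
        var json = Encoding.UTF8.GetString(body);
        return JsonConvert.DeserializeObject<ProductRatingUpdateMessage>(json);
    }
}
```

If the sender uses a different encoding or serializer, the `Decode` step has to change to match it.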

All of the logic is inside the MessageReceiver class:

    using System;
    using System.Text;
    using System.Threading.Tasks;
    using Microsoft.Azure.ServiceBus;
    using Newtonsoft.Json;

    public class MessageReceiver
    {
        private const string ServiceBusConnectionString = "Endpoint=sb://bialecki.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=[privateKey]";

        public void Receive()
        {
            var subscriptionClient = new SubscriptionClient(ServiceBusConnectionString, "productRatingUpdates", "sampleSubscription");

            try
            {
                // Registers the message pump; the handler runs once per received message.
                subscriptionClient.RegisterMessageHandler(
                    async (message, token) =>
                    {
                        var messageJson = Encoding.UTF8.GetString(message.Body);
                        var updateMessage = JsonConvert.DeserializeObject<ProductRatingUpdateMessage>(messageJson);

                        Console.WriteLine($"Received message with productId: {updateMessage.ProductId}");

                        // AutoComplete is off, so the message has to be completed explicitly.
                        await subscriptionClient.CompleteAsync(message.SystemProperties.LockToken);
                    },
                    // The exception handler must return a Task; there is nothing to await here.
                    new MessageHandlerOptions(args =>
                    {
                        Console.WriteLine(args.Exception);
                        return Task.CompletedTask;
                    })
                    { MaxConcurrentCalls = 1, AutoComplete = false });
            }
            catch (Exception e)
            {
                // Catches failures while registering the handler.
                Console.WriteLine("Exception: " + e.Message);
            }
        }
    }

Notice that creating a SubscriptionClient is very simple: it takes just one line and handles all the work. Next up is RegisterMessageHandler, which handles messages one by one and completes each of them at the end. If something goes wrong, the message will not be completed, and once its lock is released it will be available for processing again. If you set the AutoComplete option to true, the message will be completed automatically after your callback returns. This message pump approach won’t let you handle incoming messages in batches, but the MaxConcurrentCalls parameter can be set to handle multiple messages in parallel.
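To make the two options concrete, here is a rough sketch of a variant that turns AutoComplete on and raises MaxConcurrentCalls. It is not the code from my repo: the class name `ParallelReceiverSketch`, the value 4 and the connection string placeholder are assumptions for illustration. It also shows that RegisterMessageHandler returns immediately, so a console application has to keep the process alive while the pump works in the background.

```csharp
using System;
using System.Text;
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus;

public static class ParallelReceiverSketch
{
    // Placeholder: supply your own connection string.
    private const string ConnectionString = "<your Service Bus connection string>";

    public static void Main()
    {
        // Plain Main plus GetResult keeps the sketch compatible with C# 7.0.
        RunAsync().GetAwaiter().GetResult();
    }

    public static MessageHandlerOptions BuildOptions()
    {
        return new MessageHandlerOptions(OnException)
        {
            MaxConcurrentCalls = 4, // up to 4 handler invocations at once (arbitrary value)
            AutoComplete = true     // the pump completes the message when the handler returns
        };
    }

    private static async Task RunAsync()
    {
        var client = new SubscriptionClient(ConnectionString, "productRatingUpdates", "sampleSubscription");

        client.RegisterMessageHandler(
            (message, token) =>
            {
                // No CompleteAsync call here, because AutoComplete is on.
                Console.WriteLine($"Received: {Encoding.UTF8.GetString(message.Body)}");
                return Task.CompletedTask;
            },
            BuildOptions());

        // The handler registration does not block, so wait for user input.
        Console.WriteLine("Listening. Press Enter to stop.");
        Console.ReadLine();

        await client.CloseAsync();
    }

    private static Task OnException(ExceptionReceivedEventArgs args)
    {
        var context = args.ExceptionReceivedContext;
        Console.WriteLine($"Error during {context.Action} on {context.EntityPath}: {args.Exception.Message}");
        return Task.CompletedTask;
    }
}
```

With more than one concurrent call, the handler body has to be thread-safe, and messages are no longer guaranteed to be processed in the order they arrived.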

Is it ready?

The Microsoft.Azure.ServiceBus NuGet package in version 2.0 offers the most desirable functionality. It should be enough for most cases, but it also has huge gaps:

- Cannot receive messages in batches

- Cannot manage entities – create topics, queues and subscriptions

- Cannot check if queue, topic or subscription exist

Entity management features are especially important. It is reasonable that, when scaling up a service that reads from a topic, the service creates its own subscription and handles it on its own. Currently a developer needs to go to the Azure Portal and create subscriptions for each microservice manually.

Update!

Version 3.1 supports entity management. Have a look at my post about it: Managing ServiceBus queues, topics and subscriptions in .Net Core
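As a rough idea of what that looks like, the sketch below checks for a subscription and creates it when it is missing. It assumes version 3.1 or later of the Microsoft.Azure.ServiceBus package and its ManagementClient class; `SubscriptionBootstrapper` and `EnsureSubscriptionAsync` are hypothetical names used only for this example, so treat it as an outline rather than the code from the linked post.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.ServiceBus.Management;

// Hypothetical helper, assuming Microsoft.Azure.ServiceBus 3.1 or later.
public static class SubscriptionBootstrapper
{
    public static async Task EnsureSubscriptionAsync(
        string connectionString, string topicPath, string subscriptionName)
    {
        var managementClient = new ManagementClient(connectionString);
        try
        {
            // Create the subscription only when it does not exist yet.
            if (!await managementClient.SubscriptionExistsAsync(topicPath, subscriptionName))
            {
                await managementClient.CreateSubscriptionAsync(
                    new SubscriptionDescription(topicPath, subscriptionName));
            }
        }
        finally
        {
            await managementClient.CloseAsync();
        }
    }
}
```

A service could call this once at startup, before creating its SubscriptionClient. The check and the create are two separate calls, so two instances starting at the same moment with the same subscription name may still race; the connection string also needs manage rights on the namespace.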

If you liked the code posted here, you can find it (and a lot more) in my GitHub blog repo: https://github.com/mikuam/Blog.